A Samsung RKP Compendium

Post by Alexandre Adamski

The first goal of this blog post is to serve as a comprehensive reference of Samsung RKP's inner workings. It enables anyone to start poking at this obscure code that is executing at a high privilege level on their device. Our explanations are often accompanied by snippets of decompiled code that you can feel free to skip.

The second goal, and maybe of interest to more people, is to reveal a now-fixed vulnerability that allows getting code execution at EL2 in Samsung RKP. It is a good example of a simple mistake that compromises platform security, as the exploit consists of a single call that makes hypervisor memory writable at EL1.

In the first part, we will talk briefly about Samsung's kernel mitigations (which would probably deserve a blog post of their own). In the second part, we will explain how to get your hands on the RKP binary for your device.

In the third part, we will start taking apart the hypervisor framework that supports RKP on the Exynos devices, before digging into the internals of RKP in the fourth part. We will detail how it is started, how it processes the kernel page tables, how it protects sensitive data structures, and finally how it enables the kernel mitigations.

In the fifth and last part, we will reveal the vulnerability and the one-liner exploit, and take a look at the patch.

Table Of Contents

- Introduction

- Kernel Exploitation

- Getting Started

- Hypervisor Framework

- Digging Into RKP

- Vulnerability

- Conclusion

- References

Introduction

In the mobile device world, security traditionally relied on kernel mechanisms. But history has shown us that the kernel was far from being unbreakable. For most Android devices, finding a kernel vulnerability allows an attacker to modify sensitive kernel data structures, elevate privileges and execute malicious code.

It is also simply not enough to ensure kernel integrity at boot time (using the Verified Boot mechanism). Kernel integrity must also be verified at run time. This is what a security hypervisor aims to do. RKP, or "Real-time Kernel Protection", is the name of Samsung's hypervisor implementation which is part of Samsung KNOX.

There is already a lot of great research that has been done on Samsung RKP, specifically Gal Beniamini's Lifting the (Hyper) Visor: Bypassing Samsung’s Real-Time Kernel Protection and Aris Thallas's On emulating hypervisors: a Samsung RKP case study, both of which we highly recommend you read before this blog post.

Kernel Exploitation

A typical local privilege escalation (LPE) flow on Android involves:

- bypassing KASLR by leaking a kernel pointer;

- getting a one-off arbitrary kernel memory read/write;

- using it to overwrite a kernel function pointer;

- calling a function to set the address_limit to -1;

- bypassing SELinux by writing selinux_(enable|enforcing);

- escalating privileges by writing the uid, gid, sid, capabilities, etc.

Samsung has implemented mitigations to try and make that task as hard as possible for an attacker: JOPP, ROPP and KDP are three of them. Not all Samsung devices have the same mitigations in place though.

Here is what we observed after downloading various firmware updates:

| Device | Region | JOPP | ROPP | KDP |
|---|---|---|---|---|
| Low-end | International | No | No | Yes |
| Low-end | United States | No | No | Yes |
| High-end | International | Yes | No | Yes |
| High-end | United States | Yes | Yes | Yes |

JOPP

Jump-Oriented Programming Prevention (JOPP) aims to prevent JOP. It is a homemade CFI solution. It first uses a modified compiler toolchain to place a NOP instruction before each function start. It then uses a Python script (Kernel/scripts/rkp_cfp/instrument.py) to process the compiled kernel binary and replace NOPs with a magic value (0xbe7bad) and indirect branches with a direct branch to a helper function.

The helper function jopp_springboard_blr_rX (in Kernel/init/rkp_cfp.S) will check if the value before the target matches the magic value and take the jump if it does, or crash if it doesn't:

```asm
.macro springboard_blr, reg
jopp_springboard_blr_\reg:
    push    RRX, xzr
    ldr     RRX_32, [\reg, #-4]
    subs    RRX_32, RRX_32, #0xbe7, lsl #12
    cmp     RRX_32, #0xbad
    b.eq    1f
    ...
    inst    0xdeadc0de      //crash for sure
    ...
1:
    pop     RRX, xzr
    br      \reg
.endm
```
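The check performed by the springboard can be modelled in a few lines of C. This is only a sketch: the function name jopp_target_is_valid and the idea of representing kernel text as an array of 32-bit words (with the branch target given as an index) are our own assumptions; the real check is the assembly macro above, operating on live kernel memory.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Value written by instrument.py over the NOP preceding each function. */
#define JOPP_MAGIC 0x00be7badu

/* Hypothetical model: kernel text as an array of 32-bit words, and the
   indirect branch target as an index into that array. */
static bool jopp_target_is_valid(const uint32_t *text, size_t target_idx)
{
    assert(text != NULL);
    if (target_idx == 0)
        return false;                       /* nothing precedes the first word */
    uint32_t prev = text[target_idx - 1];   /* ldr RRX_32, [\reg, #-4] */
    /* An AArch64 arithmetic immediate is only 12 bits (optionally shifted
       left by 12), so 0xbe7bad is compared in two steps, as in the macro. */
    uint32_t rest = prev - (0xbe7u << 12);  /* subs RRX_32, RRX_32, #0xbe7, lsl #12 */
    return rest == 0xbadu;                  /* cmp RRX_32, #0xbad: same as prev == JOPP_MAGIC */
}
```

A target is accepted only if the word right before it is the magic value, so branching into the middle of a function fails the check and ends in the deliberate crash.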

ROPP

Return-Oriented Programming Prevention (ROPP) aims to prevent ROP. It is a homemade "stack canary". It uses the same modified compiler toolchain to emit NOP instructions before stp x29, x30 instructions and after ldp x29, x30 instructions, and to prevent allocation of registers X16 and X17. It then uses the same Python script to replace the prologues and epilogues of assembled C functions like so:

```asm
nop
stp     x29, x30, [sp,#-<frame>]!
(insns)
ldp     x29, x30, ...
nop
```

is replaced with

```asm
eor     RRX, x30, RRK
stp     x29, RRX, [sp,#-<frame>]!
(insns)
ldp     x29, RRX, ...
eor     x30, RRX, RRK
```

where RRX is an alias for X16 and RRK for X17.

RRK is called the "thread key" and is unique to each kernel task. So instead of pushing the return address directly onto the stack, the instrumented code first XORs it with this key, preventing an attacker from changing the return address without knowledge of the thread key.

The thread key itself is stored in the rrk field of the thread_info structure, but XORed with the RRMK.

```c
struct thread_info {
    // ...
    unsigned long rrk;
};
```

RRMK is called the "master key". On production devices, it is stored in the DBGBCR5_EL1 (Debug Breakpoint Control Register 5) system register. It is set by the hypervisor during kernel initialization, as we will see later.
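The arithmetic behind ROPP is plain XOR, so it can be modelled in C. This is only a sketch: the function names are ours, the key values in the example are made up, and on a device RRK and RRMK live in registers (X17 and DBGBCR5_EL1) rather than in C variables.

```c
#include <assert.h>
#include <stdint.h>

/* thread_info.rrk holds the thread key XORed with the master key (RRMK). */
static uint64_t ropp_thread_key(uint64_t stored_rrk, uint64_t rrmk)
{
    return stored_rrk ^ rrmk;
}

/* Prologue "eor RRX, x30, RRK": the value actually stored on the stack. */
static uint64_t ropp_encode_lr(uint64_t lr, uint64_t rrk)
{
    return lr ^ rrk;
}

/* Epilogue "eor x30, RRX, RRK": recovers the return address. */
static uint64_t ropp_decode_lr(uint64_t saved, uint64_t rrk)
{
    return saved ^ rrk;
}
```

If an attacker overwrites the saved slot with a raw gadget address G, the epilogue returns to G ^ RRK instead, which is useless without knowing the thread key.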

KDP

Kernel Data Protection (KDP) is another hypervisor-enabled mitigation. It is a homemade Data Flow Integrity (DFI) solution. It makes sensitive kernel data structures (like the page tables, struct cred, struct task_security_struct, struct vfsmount, SELinux status, etc.) read-only thanks to the hypervisor.

Getting Started

Hypervisor Crash Course

For understanding Samsung RKP, you will need some basic knowledge about the virtualization extensions on ARMv8 platforms. We recommend that you read the section "HYP 101" of Lifting the (Hyper) Visor or the section "ARM Architecture & Virtualization Extensions" of On emulating hypervisors.

To paraphrase these chapters, a hypervisor executes at a higher privilege level than the kernel, which gives it complete control over the kernel. Here is what the architecture looks like on ARMv8 platforms:

The hypervisor can receive calls from the kernel via the HyperVisor Call (HVC) instruction. Moreover, by using the Hypervisor Configuration Register (HCR), the hypervisor can trap critical operations usually handled by the kernel (access to virtual memory control registers, etc.) and also handle general exceptions.

Finally, the hypervisor takes advantage of a second layer of address translation, called "stage 2 translation". In the standard "stage 1 translation", a Virtual Address (VA) is translated into an Intermediate Physical Address (IPA). This IPA is then translated into the final Physical Address (PA) by the second stage.

Here is what the address translation looks like with 2-stage address translation enabled:

The hypervisor still only has a single stage address translation for its own memory accesses.
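The composition of the two stages can be illustrated with a toy model. This is a deliberately simplified sketch, not how the MMU actually walks multi-level tables: each stage is a flat array indexed by 4 KB page number, and the names (s1_table, s2_table, translate_el1) and sizes are our own assumptions.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define PAGE_SHIFT 12
#define PAGE_MASK  ((1ULL << PAGE_SHIFT) - 1)
#define NPAGES     16                 /* toy address space: 16 pages per stage */
#define UNMAPPED   UINT64_MAX

static uint64_t s1_table[NPAGES];     /* stage 1, owned by the kernel: VA page -> IPA page */
static uint64_t s2_table[NPAGES];     /* stage 2, owned by the hypervisor: IPA page -> PA page */

static void tables_reset(void)
{
    for (int i = 0; i < NPAGES; i++)
        s1_table[i] = s2_table[i] = UNMAPPED;
}

/* Translate an EL1 virtual address through both stages.
   Returns false if either stage has no mapping for the page. */
static bool translate_el1(uint64_t va, uint64_t *pa)
{
    uint64_t vpn = va >> PAGE_SHIFT;
    if (vpn >= NPAGES || s1_table[vpn] == UNMAPPED)
        return false;                 /* stage 1 fault, handled by the kernel */
    uint64_t ipn = s1_table[vpn];
    if (ipn >= NPAGES || s2_table[ipn] == UNMAPPED)
        return false;                 /* stage 2 fault, taken to EL2 */
    *pa = (s2_table[ipn] << PAGE_SHIFT) | (va & PAGE_MASK);
    return true;
}
```

The point of the model is that even a kernel with full control over stage 1 cannot reach physical memory that the hypervisor left out of stage 2.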

Our Research Platform

To make it easier to get started with this research, we have been using a bootloader-unlocked Samsung A51 (SM-A515F) instead of a full exploit chain. We downloaded the kernel source code for our device from the Samsung Open Source website, modified it and recompiled it (which did not work out of the box).

For this research, we have implemented new syscalls:

- kernel memory allocation/freeing;

- arbitrary read/write of kernel memory;

- hypervisor call (using the uh_call function).

These syscalls make it really convenient to interact with RKP, as you will see in the exploitation section: we just need to write a piece of C (or Python) code that executes in userland and performs whatever we want.

Extracting The Binary

RKP is implemented for both Exynos and Snapdragon-equipped devices, and both implementations share a lot of code. However, most if not all of the existing research has been done on the Exynos variant, as it is the most straightforward to dig into: RKP is available as a standalone binary. On Snapdragon devices, it is embedded inside the Qualcomm Hypervisor Execution Environment (QHEE) image which is very large and complicated.

Exynos Devices

On Exynos devices, RKP used to be embedded directly into the kernel binary and so it could be found as the vmm.elf file in the kernel source archives. Around late 2017/early 2018, VMM was rewritten into a new framework called uH, which most likely stands for "micro-hypervisor". Consequently, the binary has been renamed to uh.elf and can still be found in the kernel source archives for a few devices.

Following Gal Beniamini's first suggested design improvement, RKP has been moved out of the kernel binary on most devices and into a partition of its own called uh. That makes it even easier to extract, for example by grabbing it from the BL_xxx.tar archive contained in a firmware update (it is usually LZ4-compressed and starts with a 0x1000-byte header that needs to be stripped to get to the real ELF file).
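Once the image has been LZ4-decompressed (for instance with the lz4 command-line tool), stripping the header amounts to skipping 0x1000 bytes and checking for the ELF magic. The sketch below assumes the decompressed image is already in memory; the function name uh_image_to_elf is ours, and it does not interpret the header contents.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define UH_HEADER_SIZE 0x1000

/* Returns a pointer to the embedded ELF file (and its size through elf_size),
   or NULL if the buffer is too small or no ELF magic follows the header. */
static const uint8_t *uh_image_to_elf(const uint8_t *img, size_t img_size, size_t *elf_size)
{
    static const uint8_t elf_magic[4] = {0x7f, 'E', 'L', 'F'};

    assert(img != NULL && elf_size != NULL);
    if (img_size < UH_HEADER_SIZE + sizeof(elf_magic))
        return NULL;
    if (memcmp(img + UH_HEADER_SIZE, elf_magic, sizeof(elf_magic)) != 0)
        return NULL;
    *elf_size = img_size - UH_HEADER_SIZE;
    return img + UH_HEADER_SIZE;
}
```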

The architecture has changed slightly on the S20 and later devices, as Samsung has introduced another framework to support RKP (called H-Arx), most likely to further unify the code base with the Snapdragon devices, and it also features more uH "apps". However, we won't be taking a look at it in this blog post.

Snapdragon Devices

On Snapdragon devices, RKP can be found in the hyp partition, and can also be extracted from the BL_xxx.tar archive in a firmware update. It is one of the segments that make up the QHEE image.

The main difference with Exynos devices is that it is QHEE that sets the page tables and the exception vector. As a result, it is QHEE that notifies uH when exceptions happen (HVC or trapped system register), and uH has to make a call to QHEE when it wants to modify the page tables. The rest of the code is almost identical.

Symbols/Log Strings

Back in 2017, the RKP binary was shipped with symbols and log strings, but that isn't the case anymore. Nowadays the binaries are stripped, and the log strings are replaced with placeholders (like Qualcomm does). Nevertheless, we tried getting our hands on as many binaries as possible, hoping that Samsung had not done that for all of their devices, as is sometimes the case with other OEMs.

By mass downloading firmware updates for various Exynos devices, we gathered about 300 unique hypervisor binaries. None of the uh.elf files had symbols, so we had to manually port them over from the old vmm.elf files. Some of the uh.elf files had the full log strings, the latest one being from Apr 9 2019.

With both the full log strings and their hashed versions, we could figure out that the hash value is simply the SHA-256 output truncated to its first 8 hexadecimal digits. Here is a Python one-liner to calculate the hash in case you need it:

```python
import hashlib
hashlib.sha256(log_string).hexdigest()[:8]  # log_string must be a bytes object
```

Hypervisor Framework

The uH framework acts as a micro-OS, of which RKP is an application. This is really more of a way to organize things, as "apps" are simply a bunch of command handlers and don't have any kind of isolation.

Utility Structures

Before digging into the code, we will briefly tell you about the utility structures that are used extensively by uH and the RKP app. We won't be detailing their implementation, but it is important to understand what they do.

Memlists

The memlist_t structure is defined like so (names are our own):

```c
typedef struct memlist {
    memlist_entry_t *base;
    uint32_t capacity;
    uint32_t count;
    uint32_t merged;
    crit_sec_t cs;
} memlist_t;
```

It is a list of address ranges, a sort of specialized version of a C++ vector (it has a capacity and a size).

The memlist entries are defined like so:

```c
typedef struct memlist_entry {
    uint64_t addr;
    uint64_t size;
    uint64_t field_10;
    uint64_t extra;
} memlist_entry_t;
```

There are utility functions to add and remove ranges from a memlist, to check if an address is contained in a memlist, or if a range overlaps with a memlist, etc.
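Since we don't detail the real implementation, here is a minimal sketch of the semantics described above, written by us. The field names follow the structure above, but the fixed-size storage, the function names (ml_add, ml_contains_addr, ml_overlaps) and the lack of locking are assumptions, not uH's actual code.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

typedef struct {
    uint64_t addr;
    uint64_t size;
} range_t;

typedef struct {
    range_t base[32];        /* fixed capacity instead of uH's allocated array */
    uint32_t capacity;
    uint32_t count;
} memlist_sketch_t;

static void ml_init(memlist_sketch_t *ml)
{
    ml->capacity = 32;
    ml->count = 0;
}

static int ml_add(memlist_sketch_t *ml, uint64_t addr, uint64_t size)
{
    assert(size != 0);
    if (ml->count == ml->capacity)
        return -1;           /* a vector-like list would grow here instead */
    ml->base[ml->count++] = (range_t){addr, size};
    return 0;
}

static bool ml_contains_addr(const memlist_sketch_t *ml, uint64_t addr)
{
    for (uint32_t i = 0; i < ml->count; i++)
        if (addr >= ml->base[i].addr && addr - ml->base[i].addr < ml->base[i].size)
            return true;
    return false;
}

/* True if [addr, addr + size) intersects any range of the list. */
static bool ml_overlaps(const memlist_sketch_t *ml, uint64_t addr, uint64_t size)
{
    for (uint32_t i = 0; i < ml->count; i++) {
        const range_t *r = &ml->base[i];
        if (addr < r->addr + r->size && r->addr < addr + size)
            return true;
    }
    return false;
}
```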

Sparsemaps

The sparsemap_t structure is defined like so (names are our own):

```c
typedef struct sparsemap {
    char name[8];
    uint64_t start_addr;
    uint64_t end_addr;
    uint64_t count;
    uint64_t bit_per_page;
    uint64_t mask;
    crit_sec_t cs;
    memlist_t *list;
    sparsemap_entry_t *entries;
    uint32_t private;
    uint32_t field_54;
} sparsemap_t;
```

The sparsemap is a map that associates values with addresses. It is created from a memlist and maps all the addresses in this memlist to a value. The size of this value is determined by the bit_per_page field.

The sparsemap entries are defined like so:

```c
typedef struct sparsemap_entry {
    uint64_t addr;
    uint64_t size;
    uint64_t bitmap_size;
    uint8_t* bitmap;
} sparsemap_entry_t;
```

There are functions to get and set the value for each entry of the map, etc.
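To illustrate the bit_per_page idea, here is a minimal per-page value store over a single contiguous range. It is a simplification under our own assumptions: uH builds one entry per memlist range with its own layout, whereas this sketch (with the hypothetical names sm_get and sm_set) covers one range, uses 4 KB pages, and restricts bit_per_page to 1, 2, 4 or 8 so a value never straddles two bytes.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

#define SM_PAGE_SHIFT 12

typedef struct {
    uint64_t start_addr;
    uint64_t end_addr;
    uint64_t bit_per_page;
    uint8_t *bitmap;
} sparsemap_sketch_t;

static int sm_init(sparsemap_sketch_t *sm, uint64_t start, uint64_t end, uint64_t bpp)
{
    assert(bpp == 1 || bpp == 2 || bpp == 4 || bpp == 8);
    uint64_t pages = (end - start) >> SM_PAGE_SHIFT;
    sm->start_addr = start;
    sm->end_addr = end;
    sm->bit_per_page = bpp;
    sm->bitmap = calloc((pages * bpp + 7) / 8, 1);
    return sm->bitmap ? 0 : -1;
}

/* Find the byte and bit offset holding the value of the page containing addr. */
static void sm_locate(const sparsemap_sketch_t *sm, uint64_t addr, uint64_t *byte, unsigned *shift)
{
    assert(addr >= sm->start_addr && addr < sm->end_addr);
    uint64_t bit = ((addr - sm->start_addr) >> SM_PAGE_SHIFT) * sm->bit_per_page;
    *byte = bit / 8;
    *shift = (unsigned)(bit % 8);
}

static uint8_t sm_get(const sparsemap_sketch_t *sm, uint64_t addr)
{
    uint64_t byte;
    unsigned shift;
    sm_locate(sm, addr, &byte, &shift);
    uint8_t mask = (uint8_t)((1u << sm->bit_per_page) - 1);
    return (uint8_t)((sm->bitmap[byte] >> shift) & mask);
}

static void sm_set(sparsemap_sketch_t *sm, uint64_t addr, uint8_t value)
{
    uint64_t byte;
    unsigned shift;
    sm_locate(sm, addr, &byte, &shift);
    uint8_t mask = (uint8_t)((1u << sm->bit_per_page) - 1);
    /* Clear the page's bits, then store the new value without touching neighbours. */
    sm->bitmap[byte] = (uint8_t)((sm->bitmap[byte] & ~(mask << shift)) | ((value & mask) << shift));
}
```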

Critical Sections

The crit_sec_t structure is used to implement critical sections (names are our own):

```c
typedef struct crit_sec {
    uint32_t cpu;
    uint32_t lock;
    uint64_t lr;
} crit_sec_t;
```

And of course, there are functions to enter and exit the critical sections.
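The enter and exit functions are not shown here, so the following is only a guess at how a lock with these fields could work, written with C11 atomics. Treating cpu as the owning CPU and lr as the caller's return address is our interpretation of the field names; the function names and the explicit cpu parameter are hypothetical, and __builtin_return_address is a GCC/Clang builtin.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdint.h>

typedef struct {
    uint32_t cpu;            /* CPU currently holding the lock */
    atomic_uint lock;        /* 0 = free, 1 = taken */
    uint64_t lr;             /* where the lock was taken from (debug aid) */
} crit_sec_sketch_t;

static void cs_init_sketch(crit_sec_sketch_t *cs)
{
    cs->cpu = UINT32_MAX;
    atomic_init(&cs->lock, 0);
    cs->lr = 0;
}

static void cs_enter_sketch(crit_sec_sketch_t *cs, uint32_t cpu)
{
    unsigned expected = 0;
    /* Spin until the lock goes from 0 to 1; acquire ordering protects the data. */
    while (!atomic_compare_exchange_weak_explicit(&cs->lock, &expected, 1,
                                                  memory_order_acquire,
                                                  memory_order_relaxed))
        expected = 0;
    cs->cpu = cpu;
    cs->lr = (uint64_t)(uintptr_t)__builtin_return_address(0);
}

static void cs_exit_sketch(crit_sec_sketch_t *cs, uint32_t cpu)
{
    assert(cs->cpu == cpu);  /* only the owner may leave the critical section */
    cs->cpu = UINT32_MAX;
    atomic_store_explicit(&cs->lock, 0, memory_order_release);
}
```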

System Initialization

uH/RKP is loaded into memory by S-Boot (Samsung Bootloader). S-Boot jumps to the EL2 entry-point by asking the secure monitor (running at EL3) to start executing hypervisor code at the address it specifies.

```c
uint64_t cmd_load_hypervisor() {
    // ...
    part = FindPartitionByName("UH");
    if (part) {
        dprintf("%s: loading uH image from %d..\n", "f_load_hypervisor", part->block_offset);
        ReadPartition(&hdr, part->file_offset, part->block_offset, 0x4C);
        dprintf("[uH] uh page size = 0x%x\n", (((hdr.size - 1) >> 12) + 1) << 12);
        total_size = hdr.size + 0x1210;
        dprintf("[uH] uh total load size = 0x%x\n", total_size);
        if (total_size > 0x200000 || hdr.size > 0x1FEDF0) {
            dprintf("Could not do normal boot.(invalid uH length)\n");
            // ...
        }
        ret = memcmp_s(&hdr, "GREENTEA", 8);
        if (ret) {
            ret = -1;
            dprintf("Could not do uh load. (invalid magic)\n");
            // ...
        } else {
            ReadPartition(0x86FFF000, part->file_offset, part->block_offset, total_size);
            ret = pit_check_signature(part->partition_name, 0x86FFF000, total_size);
            if (ret) {
                dprintf("Could not do uh load. (invalid signing) %x\n", ret);
                // ...
            }
            load_hypervisor(0xC2000400, 0x87001000, 0x2000, 1, 0x87000000, 0x100000);
            dprintf("[uH] load hypervisor\n");
        }
    } else {
        ret = -1;
        dprintf("Could not load uH. (invalid ppi)\n");
        // ...
    }
    return ret;
}

void load_hypervisor(...) {
    dsb();
    asm("smc #0");
    isb();
}
```

Note: On recent Samsung devices, the monitor code (based on ATF - ARM Trusted Firmware) is no longer in plaintext in the S-Boot binary; an encrypted blob can be found in its place. A vulnerability in Samsung's Trusted OS implementation (TEEGRIS) would need to be found so that the plaintext monitor code can be dumped.
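The length and magic checks performed by cmd_load_hypervisor can be restated as a small standalone function. The two size checks are in fact equivalent, since 0x200000 - 0x1210 = 0x1FEDF0: hdr.size > 0x1FEDF0 holds exactly when hdr.size + 0x1210 > 0x200000 (ignoring overflow). Because the header layout is not fully visible in the decompiled code, this sketch takes the 8-byte magic and the size as separate parameters; the function name uh_header_ok is ours, and the signature check is left out.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define UH_LOAD_EXTRA 0x1210u     /* added to hdr.size by S-Boot */
#define UH_MAX_LOAD   0x200000u   /* size of the 2 MB uH region */

/* Mirrors the "invalid uH length" and "invalid magic" checks. */
static bool uh_header_ok(const char magic[8], uint32_t size)
{
    uint64_t total_size = (uint64_t)size + UH_LOAD_EXTRA;  /* 64-bit: cannot overflow */
    if (total_size > UH_MAX_LOAD || size > UH_MAX_LOAD - UH_LOAD_EXTRA)
        return false;
    return memcmp(magic, "GREENTEA", 8) == 0;
}
```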

```c
void default(...) {
    // ...
    if (get_current_el() == 8) {  // CurrentEL value 0b1000, i.e. EL2
        // Save registers x0 to x30, sp_el1, elr_el2, spsr_el2
        // ...
        memset(&rkp_bss_start, 0, 0x1000);
        main(saved_regs.x0, saved_regs.x1, &saved_regs);
    }
    asm("smc #0");
}
```

Execution starts in the default function. This function checks if it is running at EL2 before calling main. Once main returns, it makes an SMC, presumably to give the control back to S-Boot.

```c
int32_t main(int64_t x0, int64_t x1, saved_regs_t* regs) {
    // ...
    // Setting A=0 (Alignment fault checking disabled)
    //         SA=0 (SP Alignment check disabled)
    set_sctlr_el2(get_sctlr_el2() & 0xFFFFFFF5);
    if (!initialized) {
        initialized = 1;
        // Check if loading address is as expected
        if (&hyp_base != 0x87000000) {
            uh_log('L', "slsi_main.c", 326, "[-] static s1 mmu mismatch");
            return -1;
        }
        set_ttbr0_el2(&static_s1_page_tables_start__);
        s1_enable();
        uh_init(0x87000000, 0x200000);
        if (vmm_init())
            return -1;
        uh_log('L', "slsi_main.c", 338, "[+] vmm initialized");
        set_vttbr_el2(&static_s2_page_tables_start__);
        uh_log('L', "slsi_main.c", 348, "[+] static s2 mmu initialized");
        s2_enable();
        uh_log('L', "slsi_main.c", 351, "[+] static s2 mmu enabled");
    }
    uh_log('L', "slsi_main.c", 355, "[*] initialization completed");
    return 0;
}
```

After disabling the alignment checks and making sure the binary is loaded at the expected address (0x87000000 for this binary), main sets TTBR0_EL2 to its initial page tables and calls s1_enable.

```c
void s1_enable() {
    // ...
    cs_init(&s1_lock);
    // Setting Attr0=0xff (Normal memory, Outer & Inner Write-Back Non-transient,
    //                     Outer & Inner Read-Allocate Write-Allocate)
    //         Attr1=0x00 (Device-nGnRnE memory)
    //         Attr2=0x44 (Normal memory, Outer & Inner Non-cacheable)
    set_mair_el2(get_mair_el2() & 0xFFFFFFFFFF000000 | 0x4400FF);
    // Setting T0SZ=24 (TTBR0_EL2 region size is 2^40)
    //         IRGN0=0b11 && ORGN0=0b11
    //             (Normal memory, Outer & Inner Write-Back
    //              Read-Allocate No Write-Allocate Cacheable)
    //         SH0=0b11 (Inner Shareable)
    //         PS=0b010 (PA size is 40 bits, 1TB)
    set_tcr_el2(get_tcr_el2() & 0xFFF8C0C0 | 0x23F18);
    flush_entire_cache();
    sctlr_el2 = get_sctlr_el2();
    // Setting C=1 (data is cacheable for EL2)
    //         I=1 (instruction access is cacheable for EL2)
    //         WXN=1 (writeable implies non-executable for EL2)
    set_sctlr_el2(sctlr_el2 & 0xFFF7EFFB | 0x81004);
    invalidate_entire_s1_el2_tlb();
    // Setting M=1 (EL2 stage 1 address translation enabled)
    set_sctlr_el2(sctlr_el2 & 0xFFF7EFFA | 0x81005);
}
```

s1_enable sets mostly cache-related fields of MAIR_EL2, TCR_EL2 and SCTLR_EL2, and most importantly enables the MMU for EL2. main then calls the uh_init function and passes it the uH memory range.

We can already see that Gal Beniamini's second suggested design improvement, setting the WXN bit to 1, has also been implemented by the Samsung KNOX team.

```c
int64_t uh_init(int64_t uh_base, int64_t uh_size) {
    // ...
    memset(&uh_state.base, 0, sizeof(uh_state));
    uh_state.base = uh_base;
    uh_state.size = uh_size;
    static_heap_initialize(uh_base, uh_size);
    if (!static_heap_remove_range(0x87100000, 0x40000) || !static_heap_remove_range(&hyp_base, 0x87046000 - &hyp_base) ||
        !static_heap_remove_range(0x870FF000, 0x1000)) {
        uh_panic();
    }
    memory_init();
    uh_log('L', "main.c", 131, "================================= LOG FORMAT =================================");
    uh_log('L', "main.c", 132, "[LOG:L, WARN: W, ERR: E, DIE:D][Core Num: Log Line Num][File Name:Code Line]");
    uh_log('L', "main.c", 133, "==============================================================================");
    uh_log('L', "main.c", 134, "[+] uH base: 0x%p, size: 0x%lx", uh_state.base, uh_state.size);
    uh_log('L', "main.c", 135, "[+] log base: 0x%p, size: 0x%x", 0x87100000, 0x40000);
    uh_log('L', "main.c", 137, "[+] code base: 0x%p, size: 0x%p", &hyp_base, 0x46000);
    uh_log('L', "main.c", 139, "[+] stack base: 0x%p, size: 0x%p", stacks, 0x10000);
    uh_log('L', "main.c", 143, "[+] bigdata base: 0x%p, size: 0x%p", 0x870FFC40, 0x3C0);
    uh_log('L', "main.c", 152, "[+] date: %s, time: %s", "Feb 27 2020", "17:28:58");
    uh_log('L', "main.c", 153, "[+] version: %s", "UH64_3b7c7d4f exynos9610");
    uh_register_commands(0, init_cmds, 0, 5, 1);
    j_rkp_register_commands();
    uh_log('L', "main.c", 370, "%d app started", 1);
    system_init();
    apps_init();
    uh_init_bigdata();
    uh_init_context();
    memlist_init(&uh_state.dynamic_regions);
    pa_restrict_init();
    uh_state.inited = 1;
    uh_log('L', "main.c", 427, "[+] uH initialized");
    return 0;
}
```

After saving the arguments into a global control structure we named uh_state, uh_init calls static_heap_initialize. This function saves its arguments into global variables, and also initializes the doubly linked list of heap chunks with a single free chunk spanning the whole uH static memory range.

uh_init then calls static_heap_remove_range to remove 3 important ranges from the memory that can be returned by the static heap allocator (effectively splitting the original chunk into multiple ones):

- the log region;

- the uH (code/data/bss/stack) region;

- the "bigdata" (analytics) region.
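The effect of these three removals on the 2 MB uH region can be worked out with a small interval-splitting sketch. The splitting logic is ours, not uH's allocator (which operates on a doubly linked list of chunks), and we assume hyp_base is 0x87000000, as enforced by main.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_CHUNKS 16

typedef struct { uint64_t start, end; } chunk_t;   /* free range [start, end) */

/* Remove [start, start + size) from the free chunks, splitting any chunk that
   straddles the hole. Returns the new chunk count (at most MAX_CHUNKS). */
static size_t remove_range(chunk_t chunks[MAX_CHUNKS], size_t n, uint64_t start, uint64_t size)
{
    uint64_t end = start + size;
    chunk_t out[2 * MAX_CHUNKS];
    size_t m = 0;

    assert(n <= MAX_CHUNKS);
    for (size_t i = 0; i < n; i++) {
        chunk_t c = chunks[i];
        if (end <= c.start || start >= c.end) {   /* untouched chunk */
            out[m++] = c;
            continue;
        }
        if (c.start < start)                      /* piece left of the hole */
            out[m++] = (chunk_t){c.start, start};
        if (end < c.end)                          /* piece right of the hole */
            out[m++] = (chunk_t){end, c.end};
    }
    assert(m <= MAX_CHUNKS);
    for (size_t i = 0; i < m; i++)
        chunks[i] = out[i];
    return m;
}
```

Removing the log region (0x87100000, 0x40000), the code/data/bss/stack region (0x87000000 to 0x87046000) and the bigdata page (0x870FF000, 0x1000) leaves, under these assumptions, two free chunks: 0x87046000-0x870FF000 and 0x87140000-0x87200000.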

uh_init then calls memory_init.

```c
int64_t memory_init() {
    memory_buffer = 0x87100000;
    memset(0x87100000, 0, 0x40000);
    cs_init(&memory_cs);
    clean_invalidate_data_cache_region(0x87100000, 0x40000);
    memory_buffer_index = 0;
    memory_active = 1;
    return s1_map(0x87100000, 0x40000, UNKN3 | WRITE | READ);
}
```

This function zeroes out the log region and maps it into the EL2 page tables. This region will be used by the *printf string printing functions which are called inside of the uh_log function.

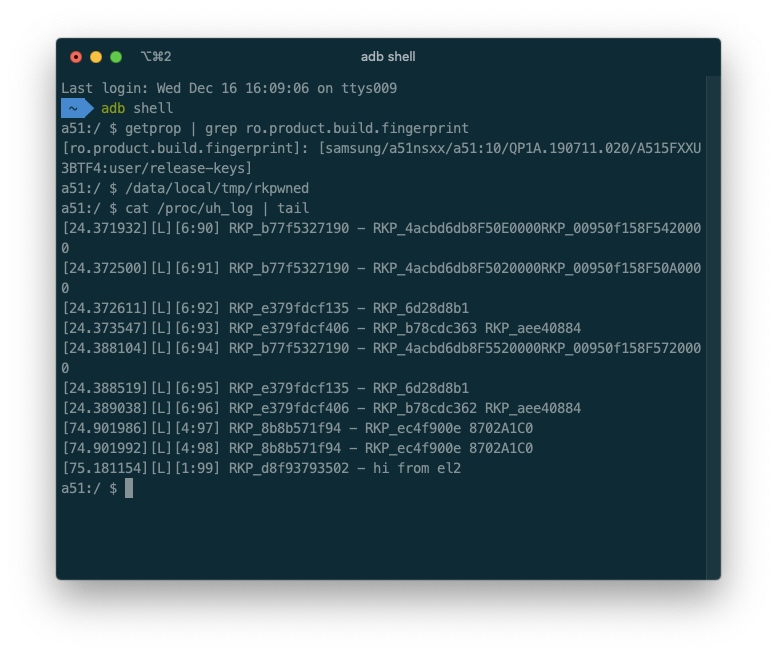

uh_init then logs various information using uh_log (these messages can be retrieved from /proc/uh_log on the device). It then calls uh_register_commands and rkp_register_commands (which also calls uh_register_commands, but with a different set of arguments).

```c
int64_t uh_register_commands(uint32_t app_id,
                             int64_t cmd_array,
                             int64_t cmd_checker,
                             uint32_t cmd_count,
                             uint32_t flag) {
    // ...
    if (uh_state.inited)
        uh_log('D', "event.c", 11, "uh_register_event is not permitted after uh_init : %d", app_id);
    if (app_id >= 8)
        uh_log('D', "event.c", 14, "wrong app_id %d", app_id);
    uh_state.cmd_evtable[app_id] = cmd_array;
    uh_state.cmd_checkers[app_id] = cmd_checker;
    uh_state.cmd_counts[app_id] = cmd_count;
    uh_state.cmd_flags[app_id] = flag;
    uh_log('L', "event.c", 21, "app_id:%d, %d events and flag(%d) has registered", app_id, cmd_count, flag);
    if (cmd_checker)
        uh_log('L', "event.c", 24, "app_id:%d, cmd checker enforced", app_id);
    return 0;
}
```

uh_register_commands takes as arguments the application ID, an array of command handlers, an optional command "checker" function, the number of commands in the array, and a debug flag. These values will be stored in the fields cmd_evtable, cmd_checkers, cmd_counts and cmd_flags of the uh_state structure.

According to the kernel sources, there are only 3 applications defined, even though uH supports up to 8.

```c
// from include/linux/uh.h
#define APP_INIT    0
#define APP_SAMPLE  1
#define APP_RKP     2

#define UH_PREFIX  UL(0xc300c000)
#define UH_APPID(APP_ID)  ((UL(APP_ID) & UL(0xFF)) | UH_PREFIX)

enum __UH_APP_ID {
    UH_APP_INIT   = UH_APPID(APP_INIT),
    UH_APP_SAMPLE = UH_APPID(APP_SAMPLE),
    UH_APP_RKP    = UH_APPID(APP_RKP),
};
```

uh_init then calls system_init and apps_init.

```c
uint64_t system_init() {
    // ...
    memset(&saved_regs, 0, sizeof(saved_regs));
    res = uh_handle_command(0, 0, &saved_regs);
    if (res)
        uh_log('D', "main.c", 380, "system init failed %d", res);
    return res;
}

uint64_t apps_init() {
    // ...
    memset(&saved_regs, 0, sizeof(saved_regs));
    for (i = 1; i != 8; ++i) {
        if (uh_state.cmd_evtable[i]) {
            uh_log('W', "main.c", 393, "[+] dst %d initialized", i);
            res = uh_handle_command(i, 0, &saved_regs);
            if (res)
                uh_log('D', "main.c", 396, "app init failed %d", res);
        }
    }
    return res;
}
```

These functions call command #0: system_init for APP_INIT, and apps_init for all the other registered applications. In our case, this ends up calling init_cmd_init and rkp_cmd_init, as we will see later.

```c
int64_t uh_handle_command(uint64_t app_id, uint64_t cmd_id, saved_regs_t* regs) {
    // ...
    if ((uh_state.cmd_flags[app_id] & 1) != 0)
        uh_log('L', "main.c", 441, "event received %lx %lx %lx %lx %lx %lx", app_id, cmd_id, regs->x2, regs->x3, regs->x4,
               regs->x5);
    cmd_checker = uh_state.cmd_checkers[app_id];
    if (cmd_id && cmd_checker && cmd_checker(cmd_id)) {
        uh_log('E', "main.c", 448, "cmd check failed %d %d", app_id, cmd_id);
        return -1;
    }
    if (app_id >= 8)
        uh_log('D', "main.c", 453, "wrong dst %d", app_id);
    if (!uh_state.cmd_evtable[app_id])
        uh_log('D', "main.c", 456, "dst %d evtable is NULL\n", app_id);
    if (cmd_id >= uh_state.cmd_counts[app_id])
        uh_log('D', "main.c", 459, "wrong type %lx %lx", app_id, cmd_id);
    cmd_handler = uh_state.cmd_evtable[app_id][cmd_id];
    if (!cmd_handler) {
        uh_log('D', "main.c", 464, "no handler %lx %lx", app_id, cmd_id);
        return -1;
    }
    return cmd_handler(regs);
}
```

uh_handle_command prints the app ID, command ID and its arguments if the debug flag was set, calls the command checker function if specified, then calls the appropriate command handler.
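Putting uh_register_commands and uh_handle_command together, the dispatch logic boils down to a table lookup guarded by a few checks. In this sketch the names (dispatch_table_t, handle_command, the demo_* handlers) are hypothetical, errors are returned where uH logs with the 'D' (DIE) level, and the bounds checks are performed before the checker rather than after it. It also assumes that the application is selected by the low byte of the x0 value built with UH_APPID, which matches the macro but is not shown in the decompiled code.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define UH_PREFIX 0xc300c000ULL
#define UH_APPID(id) (((uint64_t)(id) & 0xFF) | UH_PREFIX)
#define MAX_APPS 8

typedef struct { uint64_t x[31]; } regs_sketch_t;     /* saved x0..x30 */
typedef int64_t (*cmd_handler_t)(regs_sketch_t *regs);
typedef int (*cmd_checker_t)(uint64_t cmd_id);       /* non-zero means reject */

typedef struct {
    const cmd_handler_t *evtable[MAX_APPS];
    cmd_checker_t checkers[MAX_APPS];
    uint32_t counts[MAX_APPS];
} dispatch_table_t;

/* Route an HVC (x0 = app ID, cmd_id taken from x1) to the registered handler. */
static int64_t handle_command(const dispatch_table_t *t, uint64_t x0,
                              uint64_t cmd_id, regs_sketch_t *regs)
{
    uint64_t app_id = x0 & 0xFF;           /* assumption: low byte selects the app */

    if (app_id >= MAX_APPS || !t->evtable[app_id] || cmd_id >= t->counts[app_id])
        return -1;
    /* As in uh_handle_command, the checker is not consulted for command #0. */
    if (cmd_id && t->checkers[app_id] && t->checkers[app_id](cmd_id))
        return -1;
    cmd_handler_t handler = t->evtable[app_id][cmd_id];
    return handler ? handler(regs) : -1;
}

/* Demo handlers and checker, only used to exercise the sketch. */
static int64_t demo_init(regs_sketch_t *regs) { (void)regs; return 0; }
static int64_t demo_echo(regs_sketch_t *regs) { return (int64_t)regs->x[2]; }
static int demo_deny_cmd3(uint64_t cmd_id) { return cmd_id == 3; }
```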

uh_init then calls uh_init_bigdata and uh_init_context.

```c
int64_t uh_init_bigdata() {
    if (!bigdata_state)
        bigdata_state = malloc(0x230, 0);
    memset(0x870FFC40, 0, 960);
    memset(bigdata_state, 0, 560);
    return s1_map(0x870FF000, 0x1000, UNKN3 | WRITE | READ);
}
```

uh_init_bigdata allocates and zeroes out the buffers used by the analytics feature. It also makes the bigdata region accessible as read and write in the EL2 page tables.

```c
int64_t* uh_init_context() {
    // ...
    uh_context = malloc(0x1000, 0);
    if (!uh_context)
        uh_log('W', "RKP_1cae4f3b", 21, "%s RKP_148c665c", "uh_init_context");
    return memset(uh_context, 0, 0x1000);
}
```

uh_init_context allocates and zeroes out a buffer that is used to store the hypervisor registers on platform resets (we don't know where it is used, maybe by the monitor to restore the hypervisor state on some event).

uh_init calls memlist_init to initialize the dynamic_regions memlist in the uh_state structure, and then calls the pa_restrict_init function.

```c
int64_t pa_restrict_init() {
    memlist_init(&protected_ranges);
    memlist_add(&protected_ranges, 0x87000000, 0x200000);
    if (!memlist_contains_addr(&protected_ranges, rkp_cmd_counts))
        uh_log('D', "pa_restrict.c", 79, "Error, cmd_cnt not within protected range, cmd_cnt addr : %lx", rkp_cmd_counts);
    if (!memlist_contains_addr(&protected_ranges, (uint64_t)&protected_ranges))
        uh_log('D', "pa_restrict.c", 84, "Error protect_ranges not within protected range, protect_ranges addr : %lx",
               &protected_ranges);
    return uh_log('L', "pa_restrict.c", 87, "[+] uH PA Restrict Init");
}
```

pa_restrict_init initializes the protected_ranges memlist, and adds the uH memory region to it. It also checks that rkp_cmd_counts and the protected_ranges structures are contained in the memlist.

uh_init returns to main, which then calls vmm_init.

```c
int64_t vmm_init() {
    // ...
    uh_log('L', "vmm.c", 142, ">>vmm_init<<");
    cs_init(&stru_870355E8);
    cs_init(&panic_cs);
    set_vbar_el2(&vmm_vector_table);
    // Setting TVM=1 (EL1 write accesses to the specified EL1 virtual
    //                memory control registers are trapped to EL2)
    hcr_el2 = get_hcr_el2() | 0x4000000;
    uh_log('L', "vmm.c", 161, "RKP_398bc59b %x", hcr_el2);
    set_hcr_el2(hcr_el2);
    return 0;
}
```

vmm_init sets the VBAR_EL2 register to the exception vector used by the hypervisor and enables trapping of writes to the EL1 virtual memory control registers.

main then sets the VTTBR_EL2 register to the initial page tables that will be used for the second stage address translation of memory accesses at EL1. Finally, before returning, it calls s2_enable.

```c
void s2_enable() {
    // ...
    cs_init(&s2_lock);
    // Setting T0SZ=24 (VTTBR_EL2 region size is 2^40)
    //         SL0=0b01 (Stage 2 translation lookup start at level 1)
    //         IRGN0=0b11 && ORGN0=0b11
    //             (Normal memory, Outer & Inner Write-Back
    //              Read-Allocate No Write-Allocate Cacheable)
    //         SH0=0b11 (Inner Shareable)
    //         TG0=0b00 (Granule size is 4KB)
    //         PS=0b010 (PA size is 40 bits, 1TB)
    set_vtcr_el2(get_vtcr_el2() & 0xFFF80000 | 0x23F58);
    invalidate_entire_s1_s2_el1_tlb();
    // Setting VM=1
    set_hcr_el2(get_hcr_el2() | 1);
    lock_start = 1;
}
```

s2_enable configures the second stage address translation registers and enables it.
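The magic constants passed to the set_* helpers in main, s1_enable, vmm_init and s2_enable are easier to audit when decoded field by field. The sketch below does this with a generic bit-field extractor; the bit positions are those of the ARMv8-A architecture (SCTLR_EL2: M=0, A=1, C=2, SA=3, I=12, WXN=19; TCR_EL2/VTCR_EL2: T0SZ=[5:0], IRGN0=[9:8], ORGN0=[11:10], SH0=[13:12], TG0=[15:14], PS=[18:16]; VTCR_EL2 only: SL0=[7:6]; HCR_EL2: TVM=26), while the helper and macro names are ours.

```c
#include <assert.h>
#include <stdint.h>

/* Extract bits [hi:lo] of a register value (requires hi - lo < 63). */
static uint64_t field(uint64_t value, unsigned hi, unsigned lo)
{
    return (value >> lo) & ((1ULL << (hi - lo + 1)) - 1);
}

/* Translation control fields shared by TCR_EL2 and VTCR_EL2. */
typedef struct {
    unsigned t0sz, sl0, irgn0, orgn0, sh0, tg0, ps;
} tcr_fields_t;

static tcr_fields_t decode_tcr(uint64_t v)
{
    tcr_fields_t f = {
        .t0sz  = (unsigned)field(v, 5, 0),    /* region size is 2^(64 - T0SZ) */
        .sl0   = (unsigned)field(v, 7, 6),    /* meaningful for VTCR_EL2 only */
        .irgn0 = (unsigned)field(v, 9, 8),
        .orgn0 = (unsigned)field(v, 11, 10),
        .sh0   = (unsigned)field(v, 13, 12),
        .tg0   = (unsigned)field(v, 15, 14),
        .ps    = (unsigned)field(v, 18, 16),  /* 0b010 means 40-bit PA */
    };
    return f;
}

/* Constants copied from the decompiled code above. */
#define SCTLR_EL2_MAIN_MASK 0xFFFFFFF5ULL  /* main: clears A (bit 1) and SA (bit 3) */
#define SCTLR_EL2_S1_SET    0x81005ULL     /* s1_enable: M, C, I and WXN */
#define TCR_EL2_S1_SET      0x23F18ULL     /* s1_enable */
#define HCR_EL2_VMM_SET     0x4000000ULL   /* vmm_init: TVM */
#define VTCR_EL2_S2_SET     0x23F58ULL     /* s2_enable */
```

For instance, decode_tcr(TCR_EL2_S1_SET) yields T0SZ=24, hence a 2^(64-24) = 2^40 byte region, matching the comments in s1_enable; VTCR_EL2_S2_SET differs only by bit 6, which sets SL0=0b01.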

App Initialization

We mentioned that uh_init calls the command #0 for each of the registered applications. Let's see what is being executed for the two applications APP_INIT (some kind of init process equivalent) and APP_RKP.

APP_INIT

The command handlers registered for APP_INIT are:

| Command ID | Command Handler | Maximum Calls |
|---|---|---|
| 0x00 | init_cmd_init | - |
| 0x02 | init_cmd_add_dynamic_region | - |
| 0x03 | init_cmd_id_0x03 | - |
| 0x04 | init_cmd_initialize_dynamic_heap | - |

Let's take a look at command #0 called during startup by uH.

```c
int64_t init_cmd_init(saved_regs_t* regs) {
    // ...
    if (!uh_state.fault_handler && regs->x2) {
        uh_state.fault_handler = rkp_get_pa(regs->x2);
        uh_log('L', "main.c", 161, "[*] uH fault handler has been registered");
    }
    return 0;
}
```

The command #0 handler of APP_INIT is really simple: it sets the fault_handler field of uh_state. This field holds the physical address of a kernel structure containing the address of the kernel function that will be called when a fault is detected by the hypervisor.

When uH calls this command, it won't do anything, as the registers are all set to 0. But this command will also be called later by the kernel, as can be seen in the rkp_init function from init/main.c.

```c
// from init/main.c
static void __init rkp_init(void)
{
    uh_call(UH_APP_INIT, 0, uh_get_fault_handler(), kimage_voffset, 0, 0);
    // ...
}

// from include/linux/uh_fault_handler.h
typedef struct uh_registers {
    u64 regs[31];
    u64 sp;
    u64 pc;
    u64 pstate;
} uh_registers_t;

typedef struct uh_handler_data{
    esr_t esr_el2;
    u64 elr_el2;
    u64 hcr_el2;
    u64 far_el2;
    u64 hpfar_el2;
    uh_registers_t regs;
} uh_handler_data_t;

typedef struct uh_handler_list{
    u64 uh_handler;
    uh_handler_data_t uh_handler_data[NR_CPUS];
} uh_handler_list_t;

// from init/uh_fault_handler.c
void uh_fault_handler(void)
{
    unsigned int cpu;
    uh_handler_data_t *uh_handler_data;
    u32 exception_class;
    unsigned long flags;
    struct pt_regs regs;

    spin_lock_irqsave(&uh_fault_lock, flags);
    cpu = smp_processor_id();
    uh_handler_data = &uh_handler_list.uh_handler_data[cpu];
    exception_class = uh_handler_data->esr_el2.ec;
    if (!exception_class_string[exception_class]
        || exception_class > esr_ec_brk_instruction_execution)
        exception_class = esr_ec_unknown_reason;
    pr_alert("=============uH fault handler logging=============\n");
    pr_alert("%s",exception_class_string[exception_class]);
    pr_alert("[System registers]\n", cpu);
    pr_alert("ESR_EL2: %x\tHCR_EL2: %llx\tHPFAR_EL2: %llx\n",
             uh_handler_data->esr_el2.bits,
             uh_handler_data->hcr_el2, uh_handler_data->hpfar_el2);
    pr_alert("FAR_EL2: %llx\tELR_EL2: %llx\n", uh_handler_data->far_el2,
             uh_handler_data->elr_el2);
    memset(&regs, 0, sizeof(regs));
    memcpy(&regs, &uh_handler_data->regs, sizeof(uh_handler_data->regs));
    do_mem_abort(uh_handler_data->far_el2, (u32)uh_handler_data->esr_el2.bits, &regs);
    panic("%s",exception_class_string[exception_class]);
}

u64 uh_get_fault_handler(void)
{
    uh_handler_list.uh_handler = (u64) & uh_fault_handler;
    return (u64) & uh_handler_list;
}
```

There are 2 other commands of APP_INIT that are used for initialization-related things (even though they don't have command ID #0). They are not called by the kernel, but by S-Boot before loading/executing the kernel.

```c
int64_t dtb_update(...) {
    // ...
    dtb_find_entries(dtb, "memory", j_uh_add_dynamic_region);
    sprintf(path, "/reserved-memory");
    offset = dtb_get_path_offset(dtb, path);
    if (offset < 0) {
        dprintf("%s: fail to get path [%s]: %d\n", "dtb_update_reserved_memory", path, offset);
    } else {
        heap_base = 0;
        heap_size = 0;
        dtb_add_reserved_memory(dtb, offset, 0x87000000, 0x200000, "el2_code", "el2,uh");
        uh_call(0xC300C000, 4, &heap_base, &heap_size, 0, 0);
        dtb_add_reserved_memory(dtb, offset, heap_base, heap_size, "el2_earlymem", "el2,uh");
        dtb_add_reserved_memory(dtb, offset, 0x80001000, 0x1000, "kaslr", "kernel-kaslr");
        if (get_env_var(FORCE_UPLOAD) == 5)
            rmem_size = 0x2400000;
        else
            rmem_size = 0x1700000;
        dtb_add_reserved_memory(dtb, offset, 0xC9000000, rmem_size, "sboot", "sboot,rmem");
    }
    // ...
}

int64_t uh_add_dynamic_region(int64_t addr, int64_t size) {
    uh_call(0xC300C000, 2, addr, size, 0, 0);
    return 0;
}

void uh_call(...) {
    asm("hvc #0");
}
```

S-Boot will call command #2 for each memory node in the DTB (the HVC arguments are the memory region address and size). It will then call command #4 (the arguments are two pointers to local variables of S-Boot).

```c
int64_t init_cmd_add_dynamic_region(saved_regs_t* regs) {
    // ...
    if (uh_state.dynamic_heap_inited || !regs->x2 || !regs->x3)
        return -1;
    return memlist_add(&uh_state.dynamic_regions, regs->x2, regs->x3);
}
```

Command #2, which we named add_dynamic_region, adds a memory range to the dynamic_regions memlist, out of which the "dynamic heap" region of uH will later be carved. This is how S-Boot tells the hypervisor which physical memory regions it can access once DDR has been initialized.
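To make this bookkeeping concrete, here is a minimal sketch of a range list together with an add_dynamic_region-style handler applying the same rejection rules as init_cmd_add_dynamic_region. The memlist_sketch_t layout, its fixed capacity and every function name are hypothetical; uH's actual memlist code is not shown in this post.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical fixed-capacity list of physical memory ranges; the real
   memlist layout used by uH is not shown in this post. */
#define MEMLIST_CAPACITY 16

typedef struct {
    uint64_t base;
    uint64_t size;
} mem_range_t;

typedef struct {
    mem_range_t ranges[MEMLIST_CAPACITY];
    size_t count;
} memlist_sketch_t;

/* Appends a range; returns 0 on success, -1 if the list is full. */
int memlist_add_sketch(memlist_sketch_t *list, uint64_t base, uint64_t size) {
    if (list->count >= MEMLIST_CAPACITY)
        return -1;
    list->ranges[list->count].base = base;
    list->ranges[list->count].size = size;
    list->count++;
    return 0;
}

/* Same rejection rules as init_cmd_add_dynamic_region: no additions once
   the dynamic heap is initialized, no zero address (X2) or size (X3). */
int add_dynamic_region_sketch(memlist_sketch_t *regions, bool heap_inited,
                              uint64_t x2, uint64_t x3) {
    if (heap_inited || !x2 || !x3)
        return -1;
    return memlist_add_sketch(regions, x2, x3);
}

/* Lowest start address, i.e. what initialize_dynamic_heap later obtains
   through memlist_get_min_addr for PHYS_OFFSET. UINT64_MAX if empty. */
uint64_t memlist_min_addr_sketch(const memlist_sketch_t *list) {
    uint64_t min = UINT64_MAX;
    for (size_t i = 0; i < list->count; i++)
        if (list->ranges[i].base < min)
            min = list->ranges[i].base;
    return min;
}
```

A fixed array keeps the sketch allocation-free, which loosely matches a hypervisor that has no general-purpose allocator at this point of the boot; the real implementation may well differ.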

int64_t init_cmd_initialize_dynamic_heap(saved_regs_t* regs) {

// ...

if (!regs->x2 || !regs->x3)

return -1;

PHYS_OFFSET = memlist_get_min_addr(&uh_state.dynamic_regions);

base = virt_to_phys_el1(regs->x2);

check_kernel_input(base);

size = virt_to_phys_el1(regs->x3);

check_kernel_input(size);

if (!base || !size) {

uh_log('L', "main.c", 188, "Wrong addr in dynamicheap : base: %p, size: %p", base, size);

return -1;

}

if (uh_state.dynamic_heap_inited)

return -1;

uh_state.dynamic_heap_inited = 1;

dynamic_heap_base = *base;

if (!regs->x4) {

memlist_merge_ranges(&uh_state.dynamic_regions);

memlist_dump(&uh_state.dynamic_regions);

some_size1 = memlist_get_some_size(&uh_state.dynamic_regions, 0x100000);

set_robuf_size(some_size1 + 0x600000);

some_size2 = memlist_get_some_size(&uh_state.dynamic_regions, 0x100000);

some_size3 = memlist_get_some_size(&uh_state.dynamic_regions, 0x200000);

dynamic_heap_size = (some_size1 + some_size2 + some_size3 + 0x7FFFFF) & 0xFFE00000;

} else {

dynamic_heap_size = *size;

}

if (!dynamic_heap_base) {

dynamic_heap_base = memlist_get_region_of_size(&uh_state.dynamic_regions, dynamic_heap_size, 0x200000);

} else {

if (memlist_remove(&uh_state.dynamic_regions, dynamic_heap_base, dynamic_heap_size)) {

uh_log('L', "main.c", 281, "[-] Dynamic heap address is not existed in memlist, base : %p", dynamic_heap_base);

return -1;

}

}

dynamic_heap_initialize(dynamic_heap_base, dynamic_heap_size);

uh_log('L', "main.c", 288, "[+] Dynamic heap initialized base: %lx, size: %lx", dynamic_heap_base, dynamic_heap_size);

*base = dynamic_heap_base;

*size = dynamic_heap_size;

mapped_start = dynamic_heap_base;

if ((s2_map(dynamic_heap_base, dynamic_heap_size, UNKN1 | WRITE | READ, &mapped_start) & 0x8000000000000000) != 0) {

uh_log('L', "main.c", 299, "s2_map returned false, start : %p, size : %p", mapped_start, dynamic_heap_size);

return -1;

}

sparsemap_init("physmap", &uh_state.phys_map, &uh_state.dynamic_regions, 0x20, 0);

sparsemap_for_all_entries(&uh_state.phys_map, protected_ranges_add);

sparsemap_init("ro_bitmap", &uh_state.ro_bitmap, &uh_state.dynamic_regions, 1, 0);

sparsemap_init("dbl_bitmap", &uh_state.dbl_bitmap, &uh_state.dynamic_regions, 1, 0);

memlist_init(&uh_state.page_allocator.list);

memlist_add(&uh_state.page_allocator.list, dynamic_heap_base, dynamic_heap_size);

sparsemap_init("robuf", &uh_state.page_allocator.map, &uh_state.page_allocator.list, 1, 0);

allocate_robuf();

regions_end_addr = memlist_get_max_addr(&uh_state.dynamic_regions);

if ((regions_end_addr >> 33) <= 4) {

s2_unmap(regions_end_addr, 0xA00000000 - regions_end_addr);

s1_unmap(regions_end_addr, 0xA00000000 - regions_end_addr);

}

return 0;

}

Command #4, which we named initialize_dynamic_heap, finalizes the list of memory regions and initializes the dynamic heap allocator. S-Boot calls it once all physical memory regions have been added using the previous command. This function does multiple things (some details are still unclear to us):

- it sets PHYS_OFFSET (the physical address of the start of RAM) to the lowest address of the dynamic_regions memlist;

- unless X4 is non-zero (in which case it uses the size provided through the second pointer argument), it computes the dynamic heap size from the dynamic_regions memlist;

- if a dynamic heap base address was provided through the first pointer argument, it uses it, otherwise it finds a region of memory that is big enough;

- it removes the chosen region from the dynamic_regions memlist;

- it calls dynamic_heap_initialize which, like for the static heap, saves the values in global variables and initializes the list of heap chunks;

- it writes the final base and size back through the two pointers, which is how S-Boot learns which region to reserve as "el2_earlymem" in the DTB;

- it adds the dynamic heap region to the stage 2 translation tables;

- it initializes 3 sparsemaps, physmap, ro_bitmap and dbl_bitmap, with the dynamic_regions memlist;

- it adds all the bitmap buffers of the physmap sparsemap to the protected_ranges memlist;

- it initializes a new memlist called robuf_regions and adds the dynamic heap region to it;

- it initializes a new sparsemap called robuf with the robuf_regions memlist;

- it calls allocate_robuf, which we are going to detail below;

- finally, it unmaps from stage 1 and stage 2 the memory located between the end address of the dynamic_regions memlist and 0xA00000000 (we don't know why it does that).
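The two decisions described in this list, how big the dynamic heap is and where it goes, can be sketched as follows. find_region_of_size is a hypothetical first-fit search that assumes the third argument of memlist_get_region_of_size (0x200000) is an alignment; dynamic_heap_size_sketch only reproduces the arithmetic of the decompiled expression, which amounts to adding 6 MB and rounding up to 2 MB.

```c
#include <stddef.h>
#include <stdint.h>

typedef struct {
    uint64_t base;
    uint64_t size;
} range_t;

/* Hypothetical first-fit search: returns an aligned base inside one of
   the ranges that can hold `size` bytes, or 0 if none fits.
   `align` must be a power of two. */
uint64_t find_region_of_size(const range_t *ranges, size_t n,
                             uint64_t size, uint64_t align) {
    for (size_t i = 0; i < n; i++) {
        uint64_t start = (ranges[i].base + align - 1) & ~(align - 1);
        uint64_t end = ranges[i].base + ranges[i].size;
        if (start < end && end - start >= size)
            return start;
    }
    return 0;
}

/* Same arithmetic as dynamic_heap_size in init_cmd_initialize_dynamic_heap:
   0x7FFFFF = 0x600000 + 0x1FFFFF, so this adds 6 MB and rounds up to 2 MB.
   The 32-bit constant 0xFFE00000 also truncates the result to 32 bits. */
uint64_t dynamic_heap_size_sketch(uint64_t sum_of_sizes) {
    return (sum_of_sizes + 0x7FFFFF) & 0xFFE00000;
}
```

For instance, a total of 0x1F00000 bytes becomes 0x1F00000 + 0x600000 = 0x2500000, rounded up to the next 2 MB boundary, 0x2600000.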

int64_t allocate_robuf() {

// ...

if (!uh_state.dynamic_heap_inited) {

uh_log('L', "page_allocator.c", 84, "Dynamic heap needs to be initialized");

return -1;

}

robuf_size = uh_state.page_allocator.robuf_size & 0xFFFFF000;

robuf_base = dynamic_heap_alloc(uh_state.page_allocator.robuf_size & 0xFFFFF000, 0x1000);

if (!robuf_base)

dynamic_heap_alloc_last_chunk(&robuf_base, &robuf_size);

if (!robuf_base) {

uh_log('L', "page_allocator.c", 96, "Robuffer Alloc Fail");

return -1;

}

if (robuf_size) {

offset = 0;

do {

zero_data_cache_page(robuf_base + offset);

offset += 0x1000;

} while (offset < robuf_size);

}

return page_allocator_init(&uh_state.page_allocator, robuf_base, robuf_size);

}

allocate_robuf tries to allocate a region of robuf_size bytes from the dynamic heap allocator that was initialized moments ago and, if that fails, grabs the last contiguous chunk of memory available in the allocator. It zeroes the region page by page, then calls page_allocator_init with it as argument, which initializes the sparsemap and everything else that the page allocator will use. The page allocator (that is, the "robuf" region) is what RKP uses to hand read-only pages to the kernel (for example, for the data protection feature).
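page_allocator_init's internals are not shown here. Assuming the robuf page allocator hands out individual 4 KB pages and tracks them with one bit per page (an assumption, as are all names and the fixed capacity below), a minimal version could look like this:

```c
#include <stdint.h>
#include <string.h>

#define PAGE_SIZE_4K 0x1000ULL
#define MAX_PAGES 256 /* hypothetical capacity, only for the sketch */

typedef struct {
    uint64_t base;               /* physical base of the robuf region */
    uint64_t npages;             /* number of 4 KB pages in the region */
    uint8_t used[MAX_PAGES / 8]; /* one bit per page, 1 = handed out */
} page_allocator_sketch_t;

int page_allocator_init_sketch(page_allocator_sketch_t *pa, uint64_t base, uint64_t size) {
    if ((base & (PAGE_SIZE_4K - 1)) || size / PAGE_SIZE_4K > MAX_PAGES)
        return -1;
    pa->base = base;
    pa->npages = size / PAGE_SIZE_4K;
    memset(pa->used, 0, sizeof(pa->used));
    return 0;
}

/* Returns the physical address of a free page, or 0 if exhausted. */
uint64_t page_alloc_sketch(page_allocator_sketch_t *pa) {
    for (uint64_t i = 0; i < pa->npages; i++) {
        if (!(pa->used[i / 8] & (1u << (i % 8)))) {
            pa->used[i / 8] |= (uint8_t)(1u << (i % 8));
            return pa->base + i * PAGE_SIZE_4K;
        }
    }
    return 0;
}

/* Releases a page previously returned by page_alloc_sketch. */
int page_free_sketch(page_allocator_sketch_t *pa, uint64_t addr) {
    if (addr < pa->base || (addr - pa->base) % PAGE_SIZE_4K)
        return -1;
    uint64_t i = (addr - pa->base) / PAGE_SIZE_4K;
    if (i >= pa->npages || !(pa->used[i / 8] & (1u << (i % 8))))
        return -1; /* out of range or not currently allocated */
    pa->used[i / 8] &= (uint8_t)~(1u << (i % 8));
    return 0;
}
```

A bitmap keeps the metadata outside of the pages themselves, which matters here since the handed-out pages end up read-only for the kernel.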

APP_RKP

The command handlers registered for APP_RKP are:

Command ID  Command Handler                     Maximum Calls
-------------------------------------------------------------
0x00        rkp_cmd_init                        0
0x01        rkp_cmd_start                       1
0x02        rkp_cmd_deferred_start              1
0x03        rkp_cmd_write_pgt1                  -
0x04        rkp_cmd_write_pgt2                  -
0x05        rkp_cmd_write_pgt3                  -
0x06        rkp_cmd_emult_ttbr0                 -
0x07        rkp_cmd_emult_ttbr1                 -
0x08        rkp_cmd_emult_doresume              -
0x09        rkp_cmd_free_pgd                    -
0x0A        rkp_cmd_new_pgd                     -
0x0B        rkp_cmd_kaslr_mem                   0
0x0D        rkp_cmd_jopp_init                   1
0x0E        rkp_cmd_ropp_init                   1
0x0F        rkp_cmd_ropp_save                   0
0x10        rkp_cmd_ropp_reload                 -
0x11        rkp_cmd_rkp_robuffer_alloc          -
0x12        rkp_cmd_rkp_robuffer_free           -
0x13        rkp_cmd_get_ro_bitmap               1
0x14        rkp_cmd_get_dbl_bitmap              1
0x15        rkp_cmd_get_rkp_get_buffer_bitmap   1
0x17        rkp_cmd_id_0x17                     -
0x18        rkp_cmd_set_sctlr_el1               -
0x19        rkp_cmd_set_tcr_el1                 -
0x1A        rkp_cmd_set_contextidr_el1          -
0x1B        rkp_cmd_id_0x1B                     -
0x20        rkp_cmd_dynamic_load                -
0x40        rkp_cmd_cred_init                   1
0x41        rkp_cmd_assign_ns_size              1
0x42        rkp_cmd_assign_cred_size            1
0x43        rkp_cmd_pgd_assign                  -
0x44        rkp_cmd_cred_set_fp                 -
0x45        rkp_cmd_cred_set_security           -
0x46        rkp_cmd_assign_creds                -
0x48        rkp_cmd_ro_free_pages               -
0x4A        rkp_cmd_prot_dble_map               -
0x4B        rkp_cmd_mark_ppt                    -
0x4E        rkp_cmd_set_pages_ro_tsec_jar       -
0x4F        rkp_cmd_set_pages_ro_vfsmnt_jar     -
0x50        rkp_cmd_set_pages_ro_cred_jar       -
0x51        rkp_cmd_id_0x51                     1
0x52        rkp_cmd_init_ns                     -
0x53        rkp_cmd_ns_set_root_sb              -
0x54        rkp_cmd_ns_set_flags                -
0x55        rkp_cmd_ns_set_data                 -
0x56        rkp_cmd_ns_set_sys_vfsmnt           5
0x57        rkp_cmd_id_0x57                     -
0x60        rkp_cmd_selinux_initialized         -
0x81        rkp_cmd_test_get_par                0
0x82        rkp_cmd_test_get_wxn                0
0x83        rkp_cmd_test_ro_range               0
0x84        rkp_cmd_test_get_va_xn              0
0x85        rkp_check_vmm_unmapped              0
0x86        rkp_cmd_test_ro                     0
0x87        rkp_cmd_id_0x87                     0
0x88        rkp_cmd_check_splintering_point     0
0x89        rkp_cmd_id_0x89                     0

Let's take a look at command #0 called during startup by uH.

int64_t rkp_cmd_init() {

rkp_panic_on_violation = 1;

rkp_init_cmd_counts();

cs_init(&rkp_start_lock);

return 0;

}

The command #0 handler of APP_RKP is also really simple. It enables panicking on violations (rkp_panic_on_violation), sets the maximum number of times each command can be called (enforced by the "checker" function) by calling rkp_init_cmd_counts, and initializes the critical section that will be used by the "start" and "deferred start" commands (more on that later).
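The "Maximum Calls" column of the table above suggests a simple per-command budget. Here is a minimal sketch of how a checker could enforce it, assuming "-" means unlimited and 0 means the command is always rejected (as the test commands are outside test mode). The encoding and function names are hypothetical, and only a few rows of the table are filled in.

```c
#include <stdint.h>

/* Hypothetical encoding of the "Maximum Calls" column:
   CMD_UNLIMITED stands for "-", otherwise the number of calls left. */
#define CMD_UNLIMITED (-1)
#define CMD_TABLE_SIZE 0x100

static int cmd_remaining[CMD_TABLE_SIZE];

/* Fills a few rows of the table; every other command stays unlimited. */
void init_cmd_counts_sketch(void) {
    for (int i = 0; i < CMD_TABLE_SIZE; i++)
        cmd_remaining[i] = CMD_UNLIMITED;
    cmd_remaining[0x01] = 1; /* rkp_cmd_start */
    cmd_remaining[0x02] = 1; /* rkp_cmd_deferred_start */
    cmd_remaining[0x0B] = 0; /* rkp_cmd_kaslr_mem */
    cmd_remaining[0x13] = 1; /* rkp_cmd_get_ro_bitmap */
    cmd_remaining[0x56] = 5; /* rkp_cmd_ns_set_sys_vfsmnt */
    for (int i = 0x81; i <= 0x89; i++)
        cmd_remaining[i] = 0; /* test commands */
}

/* Returns 0 and consumes one call if allowed, -1 if rejected. */
int check_cmd_sketch(uint32_t cmd_id) {
    if (cmd_id >= CMD_TABLE_SIZE)
        return -1;
    if (cmd_remaining[cmd_id] == CMD_UNLIMITED)
        return 0;
    if (cmd_remaining[cmd_id] == 0)
        return -1;
    cmd_remaining[cmd_id]--;
    return 0;
}
```

In this model, rkp_init_cmd_counts_test would amount to resetting the test command entries to CMD_UNLIMITED.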

Exception Handling

An important part of a hypervisor is its exception handling code. These handling functions are called on invalid memory accesses by the kernel, when the kernel executes an HVC instruction, etc. They can be found by looking at the vector table specified in the VBAR_EL2 register. We have seen in vmm_init that the vector table is at vmm_vector_table. According to the ARMv8 specification, it has the following structure:

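Address   Exception Type   Description
----------------------------------------------------
+0x000    Synchronous      Current EL with SP0
+0x080    IRQ/vIRQ         Current EL with SP0
+0x100    FIQ/vFIQ         Current EL with SP0
+0x180    SError/vSError   Current EL with SP0
+0x200    Synchronous      Current EL with SPx
+0x280    IRQ/vIRQ         Current EL with SPx
+0x300    FIQ/vFIQ         Current EL with SPx
+0x380    SError/vSError   Current EL with SPx
+0x400    Synchronous      Lower EL using AArch64
+0x480    IRQ/vIRQ         Lower EL using AArch64
+0x500    FIQ/vFIQ         Lower EL using AArch64
+0x580    SError/vSError   Lower EL using AArch64
+0x600    Synchronous      Lower EL using AArch32
+0x680    IRQ/vIRQ         Lower EL using AArch32
+0x700    FIQ/vFIQ         Lower EL using AArch32
+0x780    SError/vSError   Lower EL using AArch32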
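Since the 16 entries of this vector table are 0x80 bytes apart and grouped in blocks of 0x200, an entry's offset is simply the sum of a "level" (0x0, 0x200, 0x400 or 0x600) and a "type" (0x0, 0x80, 0x100 or 0x180). These appear to be the values that vmm_dispatch and vmm_panic switch on below. A hypothetical helper to split an offset could be written as:

```c
#include <stdint.h>

/* Hypothetical decomposition of a VBAR_EL2 vector table offset. */
typedef struct {
    uint32_t level; /* 0x000, 0x200, 0x400 or 0x600 */
    uint32_t type;  /* 0x000, 0x080, 0x100 or 0x180 */
} vector_slot_t;

/* Returns 0 on success, -1 if the offset is not an entry start. */
int decode_vector_offset(uint32_t offset, vector_slot_t *out) {
    if (offset >= 0x800 || (offset & 0x7F) != 0)
        return -1; /* 16 entries, each 0x80 bytes long */
    out->level = offset & 0x600;
    out->type = offset & 0x180;
    return 0;
}

const char *vector_type_name(uint32_t type) {
    switch (type) {
    case 0x000: return "Synchronous";
    case 0x080: return "IRQ/vIRQ";
    case 0x100: return "FIQ/vFIQ";
    case 0x180: return "SError/vSError";
    default:    return "unknown";
    }
}
```

For example, offset 0x580 decodes to level 0x400 (lower EL using AArch64) and type 0x180 (SError/vSError).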

Our device has a 64-bit kernel executing at EL1, so commands are dispatched by the exception handler at vmm_vector_table+0x400, but all the handlers end up calling the same function anyway:

void exception_handler(...) {

// ...

// Save registers x0 to x30, sp_el1, elr_el2, spsr_el2

// ...

vmm_dispatch(<level>, <type>, &regs);

asm("clrex");

asm("eret");

}

vmm_dispatch receives as arguments the level and type of the exception that was taken.

int64_t vmm_dispatch(int64_t level, int64_t type, saved_regs_t* regs) {

// ...

if (has_panicked)

vmm_panic(level, type, regs, "panic on another core");

switch (type) {

case 0x0:

if (vmm_synchronous_handler(level, type, regs))

vmm_panic(level, type, regs, "syncronous handler failed");

break;

case 0x80:

uh_log('D', "vmm.c", 1132, "RKP_e3b85960");

break;

case 0x100:

uh_log('D', "vmm.c", 1135, "RKP_6d732e0a");

break;

case 0x180:

uh_log('D', "vmm.c", 1149, "RKP_3c71de0a");

break;

default:

return 0;

}

return 0;

}

In the case of a synchronous exception, vmm_dispatch calls vmm_synchronous_handler.

int64_t vmm_synchronous_handler(int64_t level, int64_t type, saved_regs_t* regs) {

// ...

esr_el2 = get_esr_el2();

switch (esr_el2 >> 26) {

case 0x12: /* HVC instruction execution in AArch32 state */

case 0x16: /* HVC instruction execution in AArch64 state */

if ((regs->x0 & 0xFFFFF000) == 0xC300C000) {

cmd_id = regs->x1;

app_id = regs->x0;

cpu_num = get_current_cpu();

if (cpu_num <= 7)

uh_state.injections[cpu_num] = 0;

uh_handle_command(app_id, cmd_id, regs);

}

return 0;

case 0x18: /* Trapped MSR, MRS or Sys. ins. execution in AArch64 state */

if ((esr_el2 & 1) == 0 && !other_msr_mrs_system(&regs->x0, esr_el2 & 0x1FFFFFF))

return 0;

vmm_panic(level, type, regs, "other_msr_mrs_system failure");

return 0;

case 0x20: /* Instruction Abort from a lower EL */

cs_enter(&s2_lock);

el1_va_to_ipa(get_elr_el2(), &ipa);

get_s2_1gb_page(ipa, &fld);

print_s2_fld(fld);

if ((fld & 3) == 3) {

get_s2_2mb_page(ipa, &sld);

print_s2_sld(sld);

if ((sld & 3) == 3) {

get_s2_4kb_page(ipa, &tld);

print_s2_tld(tld);

}

}

cs_exit(&s2_lock);

if (should_skip_prefetch_abort() == 1)

return 0;

if (!esr_ec_prefetch_abort_from_a_lower_exception_level("-snip-")) {

print_vmm_registers(regs);

return 0;

}

vmm_panic(level, type, regs, "esr_ec_prefetch_abort_from_a_lower_exception_level");

return 0;

case 0x21: /* Instruction Abort taken without a change in EL */

uh_log('L', "vmm.c", 920, "esr abort iss: 0x%x", esr_el2 & 0x1FFFFFF);

vmm_panic(level, type, regs, "esr_ec_prefetch_abort_taken_without_a_change_in_exception_level");

case 0x24: /* Data Abort from a lower EL */

if (!rkp_fault(regs))

return 0;

if ((esr_el2 & 0x3F) == 7) // Translation fault, level 3

{

va = rkp_get_va(get_hpfar_el2() << 8);

cs_enter(&s2_lock);

res = el1_va_to_pa(va, &ipa);

if (!res) {

uh_log('L', "vmm.c", 994, "Skipped data abort va: %p, ipa: %p", va, ipa);

cs_exit(&s2_lock);

return 0;

}

cs_exit(&s2_lock);

}

if ((esr_el2 & 0x7C) == 76) // Permission fault (any level) on a write (WnR == 1)

{

va = rkp_get_va(get_hpfar_el2() << 8);

at_s12e1w(va);

if ((get_par_el1() & 1) == 0) {

print_el2_state();

invalidate_entire_s1_s2_el1_tlb();

return 0;

}

}

el1_va_to_ipa(get_elr_el2(), &ipa);

get_s2_1gb_page(ipa, &fld);

print_s2_fld(fld);

if ((fld & 3) == 3) {

get_s2_2mb_page(ipa, &sld);

print_s2_sld(sld);

if ((sld & 3) == 3) {

get_s2_4kb_page(ipa, &tld);

print_s2_tld(tld);

}

}

if (esr_ec_prefetch_abort_from_a_lower_exception_level("-snip-"))

vmm_panic(level, type, regs, "esr_ec_data_abort_from_a_lower_exception_level");

else

print_vmm_registers(regs);

return 0;

case 0x25: /* Data Abort taken without a change in EL */

vmm_panic(level, type, regs, "esr_ec_data_abort_taken_without_a_change_in_exception_level");

return 0;

default:

return -1;

}

}

vmm_synchronous_handler first gets the exception class by reading the ESR_EL2 register, then:

- if it is an HVC instruction executed in AArch32 or AArch64 state and X0 is in the 0xC300C000-0xC300CFFF range, it calls the uh_handle_command function with the app_id in X0 and the cmd_id in X1;

- if it is a trapped system register access in AArch64 state, and if it was a write, it calls the other_msr_mrs_system function with the register that was being written to:

  - other_msr_mrs_system gets the value that was being written from the saved registers;

  - depending on which register was being written to, it either calls uh_panic if the register is not allowed to be written to, or checks whether the new value is valid (if specific bits have a fixed value);

  - it updates the ELR_EL2 register to make it point to the next instruction.

- if it is an instruction abort from a lower exception level:

  - it calls should_skip_prefetch_abort:

    - if IFSC==0b000111 (Translation fault, level 3) && S1PTW==1 (fault on the stage 2 translation of a stage 1 table walk) && EA==0 (not an External Abort) && FnV==0b0 (FAR is valid) && SET==0b00 (Recoverable state),

    - and if fewer than 9 prefetch aborts have been skipped so far,

    - then should_skip_prefetch_abort returns 1, otherwise it returns 0.

  - if the abort isn't skipped, it calls esr_ec_prefetch_abort_from_a_lower_exception_level:

    - the function checks if the fault address is 0;

    - if it is, it injects the fault back into EL1 and records the CPU number in the injections array of uh_state;

    - if the address was not 0, it panics.

- if it is a data abort from a lower exception level:

  - it calls rkp_fault to detect RKP faults:

    - the faulting instruction must be in kernel text;

    - the faulting instruction must be str x2, [x1];

    - x1 must point to a page table entry;

    - if it is a level 1 PTE, it calls rkp_l1pgt_write;

    - if it is a level 2 PTE, it calls rkp_l2pgt_write;

    - if it is a level 3 PTE, it calls rkp_l3pgt_write;

    - it advances the PC to the next instruction and returns.

  - if it is not an RKP fault, it checks the data fault status code:

    - if DFSC==0b000111 (Translation fault, level 3) but the faulting address can be translated (AT S12E1R or S12E1W), it returns without panicking;

    - if DFSC==0b0011xx (Permission fault, any level) on a write but the faulting address can be translated for writing (AT S12E1W), it invalidates the TLBs and returns.

  - otherwise, it calls esr_ec_prefetch_abort_from_a_lower_exception_level, which does the same as above: it injects the fault into EL1 if the fault address is 0, and panics otherwise.

- if it is an instruction abort or a data abort from EL2, it panics.
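The checks in vmm_synchronous_handler boil down to extracting a few ESR_EL2 fields. The following helpers sketch them: per the ARMv8-A architecture, EC is bits [31:26] of ESR_EL2, and for data aborts ISS bit 6 is WnR and bits [5:0] are the DFSC. The helper names are ours, not uH's.

```c
#include <stdbool.h>
#include <stdint.h>

/* ESR_EL2.EC, bits [31:26]: 0x16 = HVC (AArch64), 0x24 = data abort from a lower EL. */
static inline uint32_t esr_ec(uint64_t esr) { return (uint32_t)(esr >> 26) & 0x3F; }

/* Data abort ISS fields: DFSC is bits [5:0], WnR is bit 6. */
static inline uint32_t esr_dfsc(uint64_t esr) { return (uint32_t)(esr & 0x3F); }
static inline bool esr_wnr(uint64_t esr) { return (esr >> 6) & 1; }

/* The X0 test done for HVCs: X0 must be in 0xC300C000-0xC300CFFF. */
static inline bool is_uh_call(uint64_t x0) { return (x0 & 0xFFFFF000) == 0xC300C000; }

/* Translation fault, level 3 (DFSC == 0b000111). */
static inline bool is_l3_translation_fault(uint64_t esr) { return esr_dfsc(esr) == 0x07; }

/* Same mask as the decompiled code: WnR == 1 and DFSC == 0b0011xx. */
static inline bool is_write_permission_fault(uint64_t esr) { return (esr & 0x7C) == 0x4C; }
```

With these helpers, an ESR_EL2 value of 0x9200004F decodes as a data abort from a lower EL (EC 0x24) caused by a write permission fault.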

crit_sec_t* vmm_panic(int64_t level, int64_t type, saved_regs_t* regs, char* message) {

// ...

uh_log('L', "vmm.c", 1171, ">>vmm_panic<<");

cs_enter(&panic_cs);

uh_log('L', "vmm.c", 1175, "message: %s", message);

switch (level) {

case 0x0:

uh_log('L', "vmm.c", 1179, "level: VMM_EXCEPTION_LEVEL_TAKEN_FROM_CURRENT_WITH_SP_EL0");

break;

case 0x200:

uh_log('L', "vmm.c", 1182, "level: VMM_EXCEPTION_LEVEL_TAKEN_FROM_CURRENT_WITH_SP_ELX");

break;

case 0x400:

uh_log('L', "vmm.c", 1185, "level: VMM_EXCEPTION_LEVEL_TAKEN_FROM_LOWER_USING_AARCH64");

break;

case 0x600:

uh_log('L', "vmm.c", 1188, "level: VMM_EXCEPTION_LEVEL_TAKEN_FROM_LOWER_USING_AARCH32");

break;

default:

uh_log('L', "vmm.c", 1191, "level: VMM_UNKNOWN\n");

break;

}

switch (type) {

case 0x0:

uh_log('L', "vmm.c", 1197, "type: VMM_EXCEPTION_TYPE_SYNCHRONOUS");

break;

case 0x80:

uh_log('L', "vmm.c", 1200, "type: VMM_EXCEPTION_TYPE_IRQ_OR_VIRQ");

break;

case 0x100:

uh_log('L', "vmm.c", 1203, "type: VMM_SYSCALL\n");

break;

case 0x180:

uh_log('L', "vmm.c", 1206, "type: VMM_EXCEPTION_TYPE_SERROR_OR_VSERROR");

break;

default:

uh_log('L', "vmm.c", 1209, "type: VMM_UNKNOWN\n");

break;

}

print_vmm_registers(regs);

if ((get_sctlr_el1() & 1) == 0 || type != 0 || (level == 0 || level == 0x200)) {

has_panicked = 1;

cs_exit(&panic_cs);

if (!strcmp(message, "panic on another core"))

exynos_reset(0x8800);

uh_panic();

}

uh_panic_el1(uh_state.fault_handler, regs);

return cs_exit(&panic_cs);

}

vmm_panic logs the panic message, exception level and type, and if any of the following is true:

- the EL1 MMU is disabled (SCTLR_EL1.M is 0);

- the exception is not synchronous;

- the exception was taken from EL2;

then it calls uh_panic, otherwise it calls uh_panic_el1.
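The decision made by vmm_panic can be isolated into a small pure function. This is only a restatement of the condition in the decompiled code, with hypothetical names; the special case that resets the device on "panic on another core" is left out.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical names for the two outcomes of vmm_panic. */
enum panic_path {
    PANIC_IN_EL2,         /* uh_panic: dump state and reset the device */
    PANIC_REDIRECT_TO_EL1 /* uh_panic_el1: ERET into the kernel's handler */
};

/* level/type are the vector table values passed to vmm_panic. */
enum panic_path choose_panic_path(uint64_t sctlr_el1, uint32_t level, uint32_t type) {
    bool el1_mmu_off = (sctlr_el1 & 1) == 0;            /* SCTLR_EL1.M */
    bool not_synchronous = type != 0;                   /* 0x0 = synchronous */
    bool taken_from_el2 = level == 0 || level == 0x200; /* current EL, SP0 or SPx */
    if (el1_mmu_off || not_synchronous || taken_from_el2)
        return PANIC_IN_EL2;
    return PANIC_REDIRECT_TO_EL1;
}
```

Only a synchronous exception coming from a lower EL with the EL1 MMU enabled gives the kernel a chance to handle the fault itself.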

void uh_panic() {

uh_log('L', "main.c", 482, "uh panic!");

print_state_and_reset();

}

void print_state_and_reset() {

uh_log('L', "panic.c", 29, "count state - page_ro: %lx, page_free: %lx, s2_breakdown: %lx", page_ro, page_free,

s2_breakdown);

print_el2_state();

print_el1_state();

print_stack_contents();

bigdata_store_data();

has_panicked = 1;

exynos_reset(0x8800);

}

uh_panic logs the EL1 and EL2 system register values and the contents of the hypervisor and kernel stacks, copies a textual version of those into the "bigdata" region, and then reboots the device.

int64_t uh_panic_el1(uh_handler_list_t* fault_handler, saved_regs_t* regs) {

// ...

uh_log('L', "vmm.c", 111, ">>uh_panic_el1<<");

if (!fault_handler) {

uh_log('L', "vmm.c", 113, "uH handler did not registered");

uh_panic();

}

print_el2_state();

print_el1_state();

print_stack_contents();

cpu_num = get_current_cpu();

if (cpu_num <= 7) {

something = cpu_num - 0x21530000;

if (uh_state.injections[cpu_num] == something)

uh_log('D', "vmm.c", 99, "Injection locked");

uh_state.injections[cpu_num] = something;

}

handler_data = &fault_handler->uh_handler_data[cpu_num];

handler_data->esr_el2 = get_esr_el2();

handler_data->elr_el2 = get_elr_el2();

handler_data->hcr_el2 = get_hcr_el2();

handler_data->far_el2 = get_far_el2();

handler_data->hpfar_el2 = get_hpfar_el2() << 8;

if (regs)

memcpy(fault_handler->uh_handler_data[cpu_num].regs.regs, regs, 272);

set_elr_el2(fault_handler->uh_handler);

return 0;

}

uh_panic_el1 fills, for the current CPU, the structure that was specified in command #0 of APP_INIT, which we have seen earlier. It also sets ELR_EL2 to the kernel's handler function, so that this function is called when the ERET instruction is executed.

Digging Into RKP

Startup

RKP startup is performed in two stages using two different commands:

- command #1 (start): called by the kernel in start_kernel, right after mm_init;

- command #2 (deferred start): called by the kernel in kernel_init, right before starting init.

RKP Start

On the kernel side, this command is called from rkp_init, which is also in init/main.c.

rkp_init_t rkp_init_data __rkp_ro = {

.magic = RKP_INIT_MAGIC,

.vmalloc_start = VMALLOC_START,

.no_fimc_verify = 0,

.fimc_phys_addr = 0,

._text = (u64)_text,

._etext = (u64)_etext,

._srodata = (u64)__start_rodata,

._erodata = (u64)__end_rodata,

.large_memory = 0,

};

static void __init rkp_init(void)

{

// ...

rkp_init_data.vmalloc_end = (u64)high_memory;

rkp_init_data.init_mm_pgd = (u64)__pa(swapper_pg_dir);

rkp_init_data.id_map_pgd = (u64)__pa(idmap_pg_dir);

rkp_init_data.tramp_pgd = (u64)__pa(tramp_pg_dir);

#ifdef CONFIG_UH_RKP_FIMC_CHECK

rkp_init_data.no_fimc_verify = 1;

#endif

rkp_init_data.tramp_valias = (u64)TRAMP_VALIAS;

rkp_init_data.zero_pg_addr = (u64)__pa(empty_zero_page);

// ...

uh_call(UH_APP_RKP, RKP_START, (u64)&rkp_init_data, (u64)kimage_voffset, 0, 0);

}

asmlinkage __visible void __init start_kernel(void)

{

// ...

rkp_init();

// ...

}

On the hypervisor side, the command handler is as follows:

int64_t rkp_cmd_start(saved_regs_t* regs) {

// ...

cs_enter(&rkp_start_lock);

if (rkp_inited) {

cs_exit(&rkp_start_lock);

uh_log('L', "rkp.c", 133, "RKP is already started");

return -1;

}

res = rkp_start(regs);

cs_exit(&rkp_start_lock);

return res;

}

rkp_cmd_start calls rkp_start which does the real work.

int64_t rkp_start(saved_regs_t* regs) {

// ...

KIMAGE_VOFFSET = regs->x3;

rkp_init_data = rkp_get_pa(regs->x2);

if (rkp_init_data->magic - 0x5AFE0001 >= 2) {

uh_log('L', "rkp_init.c", 85, "RKP INIT-Bad Magic(%d), %p", regs->x2, rkp_init_data);

return -1;

}

if (rkp_init_data->magic == 0x5AFE0002) {

rkp_init_cmd_counts_test();

rkp_test = 1;

}

INIT_MM_PGD = rkp_init_data->init_mm_pgd;

ID_MAP_PGD = rkp_init_data->id_map_pgd;

ZERO_PG_ADDR = rkp_init_data->zero_pg_addr;

TRAMP_PGD = rkp_init_data->tramp_pgd;

TRAMP_VALIAS = rkp_init_data->tramp_valias;

VMALLOC_START = rkp_init_data->vmalloc_start;

VMALLOC_END = rkp_init_data->vmalloc_end;

TEXT = rkp_init_data->_text;

ETEXT = rkp_init_data->_etext;

TEXT_PA = rkp_get_pa(TEXT);

ETEXT_PA = rkp_get_pa(ETEXT);

SRODATA = rkp_init_data->_srodata;

ERODATA = rkp_init_data->_erodata;

TRAMP_PGD_PAGE = TRAMP_PGD & 0xFFFFFFFFF000;

INIT_MM_PGD_PAGE = INIT_MM_PGD & 0xFFFFFFFFF000;

LARGE_MEMORY = rkp_init_data->large_memory;

page_ro = 0;

page_free = 0;

s2_breakdown = 0;

pmd_allocated_by_rkp = 0;

NO_FIMC_VERIFY = rkp_init_data->no_fimc_verify;

if (rkp_bitmap_init() < 0) {

uh_log('L', "rkp_init.c", 150, "Failed to init bitmap");

return -1;

}

memlist_init(&executable_regions);

memlist_set_field_14(&executable_regions);

memlist_add(&executable_regions, TEXT, ETEXT - TEXT);

if (TRAMP_VALIAS)

memlist_add(&executable_regions, TRAMP_VALIAS, 0x1000);

memlist_init(&dynamic_load_regions);

memlist_set_field_14(&dynamic_load_regions);

put_last_dynamic_heap_chunk_in_static_heap();

if (rkp_paging_init() < 0) {

uh_log('L', "rkp_init.c", 169, "rkp_pging_init fails");

return -1;

}

rkp_inited = 1;

if (rkp_l1pgt_process_table(get_ttbr0_el1() & 0xFFFFFFFFF000, 0, 1) < 0) {

uh_log('L', "rkp_init.c", 179, "processing l1pgt fails");

return -1;

}

uh_log('L', "rkp_init.c", 183, "[*] HCR_EL2: %lx, SCTLR_EL2: %lx", get_hcr_el2(), get_sctlr_el2());

uh_log('L', "rkp_init.c", 184, "[*] VTTBR_EL2: %lx, TTBR0_EL2: %lx", get_vttbr_el2(), get_ttbr0_el2());

uh_log('L', "rkp_init.c", 185, "[*] MAIR_EL1: %lx, MAIR_EL2: %lx", get_mair_el1(), get_mair_el2());

uh_log('L', "rkp_init.c", 186, "RKP Activated");

return 0;

}

Let's break down this function:

- it saves the second argument into the global variable KIMAGE_VOFFSET;

- like most command handlers that we will see, it converts its first argument, rkp_init_data, from a virtual address to a physical address by calling the rkp_get_pa function;

- it then checks its magic field. If it is the test mode magic, it calls rkp_init_cmd_counts_test, which allows test commands 0x81-0x88 to be called an unlimited number of times;

- it saves the various fields of rkp_init_data into global variables;

- it initializes a new memlist called executable_regions and adds the text section of the kernel to it, and does the same with the TRAMP_VALIAS page if provided;

- it initializes a new memlist called dynamic_load_regions, which is used for the "dynamic executable loading" feature of RKP (more on that at the end of the blog post);

- it calls put_last_dynamic_heap_chunk_in_static_heap (the end result on our device is that the static heap acquires all the unused dynamic memory and the dynamic heap doesn't have any memory left);

- it calls rkp_paging_init and rkp_l1pgt_process_table, which we will detail below;

- it logs some EL2 system register values and returns.
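The magic check in rkp_start relies on unsigned wrap-around: magic - 0x5AFE0001 >= 2 rejects everything except 0x5AFE0001 and 0x5AFE0002 in a single comparison, since any value below 0x5AFE0001 wraps around to a huge number. A sketch, assuming the field is handled as an unsigned 64-bit value (the constant and function names are ours):

```c
#include <stdbool.h>
#include <stdint.h>

#define RKP_MAGIC_NORMAL 0x5AFE0001ULL /* regular start */
#define RKP_MAGIC_TEST   0x5AFE0002ULL /* test mode */

/* Equivalent to !(magic - 0x5AFE0001 >= 2) with unsigned arithmetic:
   values below 0x5AFE0001 wrap around and fail the comparison too. */
bool rkp_magic_is_valid(uint64_t magic) {
    return magic - RKP_MAGIC_NORMAL < 2;
}

/* Test mode additionally unlocks the test commands. */
bool rkp_magic_is_test(uint64_t magic) {
    return magic == RKP_MAGIC_TEST;
}
```

Writing the check as magic == 0x5AFE0001 || magic == 0x5AFE0002 would be equivalent; the subtraction form is a common compiler idiom for range checks.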

int64_t rkp_paging_init() {

// ...

if (!TEXT || (TEXT & 0xFFF) != 0) {

uh_log('L', "rkp_paging.c", 637, "kernel text start is not aligned, stext : %p", TEXT);

return -1;

}

if (!ETEXT || (ETEXT & 0xFFF) != 0) {

uh_log('L', "rkp_paging.c", 642, "kernel text end is not aligned, etext : %p", ETEXT);

return -1;

}

if (TEXT_PA <= get_base() && ETEXT_PA > get_base())

return -1;

if (s2_unmap(0x87000000, 0x200000))

return -1;

if (rkp_phys_map_set_region(TEXT_PA, ETEXT - TEXT, TEXT) < 0) {

uh_log('L', "rkp_paging.c", 435, "physmap set failed for kernel text");

return -1;

}

if (s1_map(TEXT_PA, ETEXT - TEXT, UNKN1 | READ)) {

uh_log('L', "rkp_paging.c", 447, "Failed to make VMM S1 range RO");

return -1;

}

if (INIT_MM_PGD >= TEXT_PA && INIT_MM_PGD < ETEXT_PA && s1_map(INIT_MM_PGD, 0x1000, UNKN1 | WRITE | READ)) {

uh_log('L', "rkp_paging.c", 454, "failed to make swapper_pg_dir RW");

return -1;

}

rkp_phys_map_lock(ZERO_PG_ADDR);

if (rkp_s2_page_change_permission(ZERO_PG_ADDR, 0, 1, 1) < 0) {

uh_log('L', "rkp_paging.c", 462, "Failed to make executable for empty_zero_page");

return -1;

}

rkp_phys_map_unlock(ZERO_PG_ADDR);

if (rkp_set_kernel_rox(0))

return -1;

if (rkp_s2_range_change_permission(0x87100000, 0x87140000, 0x80, 1, 1) < 0) {

uh_log('L', "rkp_paging.c", 667, "Failed to make UH_LOG region RO");

return -1;

}

if (!uh_state.dynamic_heap_inited)

return 0;

if (rkp_s2_range_change_permission(uh_state.dynamic_heap_base,

uh_state.dynamic_heap_base + uh_state.dynamic_heap_size, 0x80, 1, 1) < 0) {

uh_log('L', "rkp_paging.c", 685, "Failed to make dynamic_heap region RO");

return -1;

}

return 0;

}

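Let's break down rkp_paging_init as well:

- it does some sanity checks on the kernel text region (for example, its start and end must be page-aligned);

- it unmaps the hypervisor's own memory (0x87000000-0x87200000, the "el2_code" region reserved by S-Boot) from the EL1 stage 2;

- it marks the kernel text region as TEXT (name is our own) in the phys_map;

- it maps the kernel text region as RO in the EL2 stage 1;

- if swapper_pg_dir lies inside the kernel text region, it maps it as RW in the EL2 stage 1;

- it makes the empty_zero_page ROX in the EL1 stage 2;

- it calls rkp_set_kernel_rox, which we will detail below;

- it makes the log region (0x87100000-0x87140000) ROX in the EL1 stage 2;

- if the dynamic heap was initialized, it also makes the dynamic heap region ROX in the EL1 stage 2.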

int64_t rkp_set_kernel_rox(int64_t access) {

// ...

erodata_pa = rkp_get_pa(ERODATA);

if (rkp_s2_range_change_permission(TEXT_PA, erodata_pa, access, 1, 1) < 0) {

uh_log('L', "rkp_paging.c", 392, "Failed to make Kernel range ROX");

return -1;

}

if (access)

return 0;

if (((erodata_pa | ETEXT_PA) & 0xFFF) != 0) {

uh_log('L', "rkp_paging.c", 158, "start or end addr is not aligned, %p - %p", ETEXT_PA, erodata_pa);

return 0;

}

if (ETEXT_PA > erodata_pa) {

uh_log('L', "rkp_paging.c", 163, "start addr is bigger than end addr %p, %p", ETEXT_PA, erodata_pa);

return 0;

}

paddr = ETEXT_PA;

while (sparsemap_set_value_addr(&uh_state.ro_bitmap, paddr, 1) >= 0) {

paddr += 0x1000;

if (paddr >= erodata_pa)

return 0;

}

uh_log('L', "rkp_paging.c", 171, "set_pgt_bitmap fail, %p", paddr);

return 0;

}

rkp_set_kernel_rox changes the EL1 stage 2 permissions of the range [kernel text start; rodata end] according to its access argument. Here access is 0, so the range is made RWX (yes, RWX; the function will be called again later with 0x80, making it RO). Only when access is 0 does it then mark the range [kernel text end; rodata end] as RO in the ro_bitmap sparsemap.
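The ro_bitmap update at the end of rkp_set_kernel_rox walks the range one 4 KB page at a time. Below is a minimal sketch of that walk over a flat bitmap. The real ro_bitmap is a sparsemap, so the ro_bitmap_sketch_t layout, its fixed coverage, and the choice to return -1 on errors (rkp_set_kernel_rox itself returns 0) are assumptions made for illustration.

```c
#include <stdint.h>

#define PAGE_4K 0x1000ULL
#define BITMAP_PAGES 1024 /* hypothetical: covers 4 MB from map_base */

typedef struct {
    uint64_t map_base;              /* physical address of bit 0 */
    uint8_t bits[BITMAP_PAGES / 8]; /* one bit per 4 KB page */
} ro_bitmap_sketch_t;

int bitmap_set_page(ro_bitmap_sketch_t *bm, uint64_t pa, int value) {
    if (pa < bm->map_base)
        return -1;
    uint64_t idx = (pa - bm->map_base) / PAGE_4K;
    if (idx >= BITMAP_PAGES)
        return -1;
    if (value)
        bm->bits[idx / 8] |= (uint8_t)(1u << (idx % 8));
    else
        bm->bits[idx / 8] &= (uint8_t)~(1u << (idx % 8));
    return 0;
}

int bitmap_test_page(const ro_bitmap_sketch_t *bm, uint64_t pa) {
    if (pa < bm->map_base)
        return 0;
    uint64_t idx = (pa - bm->map_base) / PAGE_4K;
    if (idx >= BITMAP_PAGES)
        return 0;
    return (bm->bits[idx / 8] >> (idx % 8)) & 1;
}

/* Marks every page of [start, end) after the same alignment and ordering
   checks as rkp_set_kernel_rox; stops at the first failure.
   Returns the number of pages marked, or -1 on error. */
int mark_range_ro(ro_bitmap_sketch_t *bm, uint64_t start, uint64_t end) {
    if (((start | end) & (PAGE_4K - 1)) != 0 || start > end)
        return -1;
    int marked = 0;
    for (uint64_t pa = start; pa < end; pa += PAGE_4K) {
        if (bitmap_set_page(bm, pa, 1) < 0)
            return -1;
        marked++;
    }
    return marked;
}
```

A flat bitmap keeps the example short; a sparsemap only allocates bitmaps for memory regions that actually exist, which matters with several gigabytes of RAM split across distant ranges.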

After rkp_paging_init, rkp_start calls rkp_l1pgt_process_table to process the page tables (mainly to make all 3 levels of tables read-only). It calls this function on the table pointed to by the TTBR0_EL1 register.

RKP Deferred Start

On the kernel side, this command is called in rkp_deferred_init in include/linux/rkp.h.

// from include/linux/rkp.h

static inline void rkp_deferred_init(void){

uh_call(UH_APP_RKP, RKP_DEFERRED_START, 0, 0, 0, 0);

}

// from init/main.c

static int __ref kernel_init(void *unused)

{

// ...

rkp_deferred_init();

// ...

}

On the hypervisor side, the command handler is as follows:

int64_t rkp_cmd_deferred_start() {

return rkp_deferred_start();

}

int64_t rkp_deferred_start() {

uh_log('L', "rkp_init.c", 193, "DEFERRED INIT START");

if (rkp_set_kernel_rox(0x80))

return -1;

if (rkp_l1pgt_process_table(INIT_MM_PGD, 0x1FFFFFF, 1) < 0) {

uh_log('L', "rkp_init.c", 198, "Failed to make l1pgt processing");

return -1;

}

if (TRAMP_PGD && rkp_l1pgt_process_table(TRAMP_PGD, 0x1FFFFFF, 1) < 0) {

uh_log('L', "rkp_init.c", 204, "Failed to make l1pgt processing");

return -1;

}

rkp_deferred_inited = 1;

uh_log('L', "rkp_init.c", 217, "DEFERRED INIT IS DONE\n");

memory_fini();

return 0;

}

rkp_cmd_deferred_start and rkp_deferred_start do the following:

- call rkp_set_kernel_rox again (the first time was in the normal start), but this time with 0x80 (read-only) as an argument, so the kernel text + rodata gets marked as RO in stage 2;

- call rkp_l1pgt_process_table on swapper_pg_dir;

- call rkp_l1pgt_process_table on tramp_pg_dir (if set);

- set the rkp_deferred_inited flag;

- finally, call memory_fini.

RKP Bitmaps

There are 3 more RKP commands called by the kernel during startup.

Two of them are also called from rkp_init in init/main.c:

// from init/main.c

sparse_bitmap_for_kernel_t* rkp_s_bitmap_ro __rkp_ro = 0;

sparse_bitmap_for_kernel_t* rkp_s_bitmap_dbl __rkp_ro = 0;

static void __init rkp_init(void)

{

// ...

rkp_s_bitmap_ro = (sparse_bitmap_for_kernel_t *)

uh_call(UH_APP_RKP, RKP_GET_RO_BITMAP, 0, 0, 0, 0);

rkp_s_bitmap_dbl = (sparse_bitmap_for_kernel_t *)

uh_call(UH_APP_RKP, RKP_GET_DBL_BITMAP, 0, 0, 0, 0);

// ...

}

// from include/linux/rkp.h

typedef struct sparse_bitmap_for_kernel {

u64 start_addr;

u64 end_addr;

u64 maxn;

char **map;

} sparse_bitmap_for_kernel_t;

static inline u8 rkp_is_pg_protected(u64 va){

return rkp_check_bitmap(__pa(va), rkp_s_bitmap_ro);

}

static inline u8 rkp_is_pg_dbl_mapped(u64 pa){

return rkp_check_bitmap(pa, rkp_s_bitmap_dbl);

}

Let's take a look at the command handler for RKP_GET_RO_BITMAP.

int64_t rkp_cmd_get_ro_bitmap(saved_regs_t* regs) {

// ...

if (rkp_deferred_inited)

return -1;

bitmap = dynamic_heap_alloc(0x20, 0);

if (!bitmap) {

uh_log('L', "rkp.c", 302, "Fail alloc robitmap for kernel");

return -1;

}

memset(bitmap, 0, sizeof(sparse_bitmap_for_kernel_t));

res = sparsemap_bitmap_kernel(&uh_state.ro_bitmap, bitmap);

if (res) {

uh_log('L', "rkp.c", 309, "Fail sparse_map_bitmap_kernel");

return res;

}

regs->x0 = rkp_get_va(bitmap);

if (regs->x2)

*virt_to_phys_el1(regs->x2) = regs->x0;

uh_log('L', "rkp.c", 322, "robitmap:%p", bitmap);

return 0;

}

rkp_cmd_get_ro_bitmap (which refuses to run once the deferred start has completed) allocates a sparse_bitmap_for_kernel_t structure from the dynamic heap, zeroes it, and passes it to sparsemap_bitmap_kernel, which fills it with the information in ro_bitmap. It then returns the structure's VA in X0 and, if a pointer was provided in X2, also writes the VA to that location (after translating the pointer with virt_to_phys_el1).

int64_t sparsemap_bitmap_kernel(sparsemap_t* map, sparse_bitmap_for_kernel_t* kernel_bitmap) {

// ...

if (!map || !kernel_bitmap)

return -1;

kernel_bitmap->start_addr = map->start_addr;

kernel_bitmap->end_addr = map->end_addr;

kernel_bitmap->maxn = map->count;

bitmaps = dynamic_heap_alloc(8 * map->count, 0);

if (!bitmaps) {

uh_log('L', "sparsemap.c", 202, "kernel_bitmap does not allocated : %lu", map->count);

return -1;

}

if (map->private) {

uh_log('L', "sparsemap.c", 206, "EL1 doesn't support to get private sparsemap");

return -1;

}

memset(bitmaps, 0, 8 * map->count);

kernel_bitmap->map = (bitmaps - PHYS_OFFSET) | 0xFFFFFFC000000000;

index = 0;

do {

bitmap = map->entries[index].bitmap;

if (bitmap)

bitmaps[index] = (bitmap - PHYS_OFFSET) | 0xFFFFFFC000000000;

++index;

} while (index < kernel_bitmap->maxn);

return 0;

}

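sparsemap_bitmap_kernel takes a sparsemap (private sparsemaps are refused), converts the physical address of each of its bitmaps, as well as that of the array holding them, into a kernel virtual address by computing (pa - PHYS_OFFSET) | 0xFFFFFFC000000000, and stores the result in a sparse_bitmap_for_kernel_t structure.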
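The conversion performed by sparsemap_bitmap_kernel is the arm64 linear-map translation for a kernel with 39-bit virtual addresses. The sketch below reproduces it and its inverse. It assumes pa - PHYS_OFFSET fits in 38 bits (so that the OR behaves like an addition), and the PHYS_OFFSET value of 0x80000000 used in the tests is only illustrative.

```c
#include <stdint.h>

/* Start of the linear mapping used by sparsemap_bitmap_kernel. */
#define LINEAR_MAP_BASE 0xFFFFFFC000000000ULL

/* Same expression as the decompiled code: (pa - PHYS_OFFSET) | base. */
uint64_t pa_to_kernel_va(uint64_t pa, uint64_t phys_offset) {
    return (pa - phys_offset) | LINEAR_MAP_BASE;
}

/* Inverse, valid for addresses produced by pa_to_kernel_va. */
uint64_t kernel_va_to_pa(uint64_t va, uint64_t phys_offset) {
    return (va & ~LINEAR_MAP_BASE) + phys_offset;
}
```

For example, with PHYS_OFFSET = 0x80000000, the physical address 0x80001000 maps to the kernel address 0xFFFFFFC000001000.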

rkp_cmd_get_dbl_bitmap is very similar to rkp_cmd_get_ro_bitmap, but of course instead of sending the bitmaps of the ro_bitmap, it sends those of dbl_bitmap.

The third command, rkp_cmd_get_rkp_get_buffer_bitmap, is also used to retrieve a sparsemap: page_allocator.map. It is called by the kernel in rkp_robuffer_init from init/main.c.

sparse_bitmap_for_kernel_t* rkp_s_bitmap_buffer __rkp_ro = 0;

static void __init rkp_robuffer_init(void)
{
    rkp_s_bitmap_buffer = (sparse_bitmap_for_kernel_t *)
        uh_call(UH_APP_RKP, RKP_GET_RKP_GET_BUFFER_BITMAP, 0, 0, 0, 0);
}

asmlinkage __visible void __init start_kernel(void)
{
    // ...
    rkp_robuffer_init();
    // ...
    rkp_init();
    // ...
}

// from include/linux/rkp.h
static inline unsigned int is_rkp_ro_page(u64 va){
    return rkp_check_bitmap(__pa(va), rkp_s_bitmap_buffer);
}

To summarize, the kernel uses these bitmaps to check whether some data is protected by RKP (i.e. allocated on a read-only page); if it is, the kernel must call one of the RKP commands to modify it.
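The kernel-side rkp_check_bitmap is not shown here. As an illustration only, a lookup in such a sparse bitmap could look like the sketch below. It reuses the field names filled in by sparsemap_bitmap_kernel, but the field types, the 1 GB chunk covered by each map entry and the one-bit-per-4KB-page layout are assumptions, and check_ro_bitmap is a hypothetical name.

```c
#include <stddef.h>
#include <stdint.h>

/* Field names as filled in by sparsemap_bitmap_kernel; the types are assumptions. */
typedef struct {
    uint64_t start_addr;
    uint64_t end_addr;
    uint64_t maxn;    /* number of entries in map */
    uint64_t **map;   /* one bitmap per chunk, NULL when the chunk has none */
} sparse_bitmap_for_kernel_t;

/* Assumed layout: each map entry covers 1 GB, with one bit per 4 KB page. */
#define CHUNK_SHIFT 30
#define PAGE_SHIFT_4K 12

/* Returns 1 if the page containing pa is marked in the bitmap, 0 otherwise. */
int check_ro_bitmap(uint64_t pa, const sparse_bitmap_for_kernel_t *bm)
{
    if (!bm || !bm->map || pa < bm->start_addr)
        return 0;

    uint64_t offset = pa - bm->start_addr;
    uint64_t chunk = offset >> CHUNK_SHIFT;
    if (chunk >= bm->maxn || !bm->map[chunk])
        return 0;

    /* Page number within the chunk, then word and bit within that chunk's bitmap. */
    uint64_t page = (offset & ((1ULL << CHUNK_SHIFT) - 1)) >> PAGE_SHIFT_4K;
    return (int)((bm->map[chunk][page / 64] >> (page % 64)) & 1);
}
```

Keeping one bitmap pointer per chunk, with NULL for chunks that hold no tracked pages, is what makes the structure sparse: only regions that actually contain protected pages need backing memory.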

Page Tables Processing

As a quick reminder, here is the Linux memory layout on Android (4KB pages + 3 levels):

Start              End                Size    Use
-----------------------------------------------------------------------
0000000000000000   0000007fffffffff   512GB   user
ffffff8000000000   ffffffffffffffff   512GB   kernel

And here is the corresponding translation table lookup:

+--------+--------+--------+--------+--------+--------+--------+--------+
|63    56|55    48|47    40|39    32|31    24|23    16|15     8|7      0|
+--------+--------+--------+--------+--------+--------+--------+--------+
 |                 |         |         |         |         |
 |                 |         |         |         |         v
 |                 |         |         |         |   [11:0]  in-page offset
 |                 |         |         |         +-> [20:12] L3 index (PTE)
 |                 |         |         +-----------> [29:21] L2 index (PMD)
 |                 |         +---------------------> [38:30] L1 index (PUD)
 |                 +-------------------------------> [47:39] L0 index (PGD)
 +-------------------------------------------------> [63] TTBR0/1

Because only 3 levels are used, level 0 is folded into level 1, so keep in mind for this section that PGD = PUD = VA[38:30].
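The split above boils down to a few shifts and masks. In this sketch (the helper names are hypothetical), pgd_index extracts VA[38:30], the index RKP's first-level processing works with, and va_high_bits extracts VA[63:39], which is 0x1FFFFFF for kernel addresses; that is the high_bits value rkp_l1pgt_process_table compares against.

```c
#include <stdint.h>

/* 4 KB pages, 3 levels, 39-bit VAs: level 0 is folded, so PGD = PUD = VA[38:30]. */
unsigned pgd_index(uint64_t va) { return (unsigned)((va >> 30) & 0x1FF); }
unsigned pmd_index(uint64_t va) { return (unsigned)((va >> 21) & 0x1FF); }
unsigned pte_index(uint64_t va) { return (unsigned)((va >> 12) & 0x1FF); }
uint64_t in_page_offset(uint64_t va) { return va & 0xFFF; }

/* VA[63:39]: 0x1FFFFFF for TTBR1_EL1 (kernel) addresses, 0 for TTBR0_EL1 (user) ones. */
uint64_t va_high_bits(uint64_t va) { return va >> 39; }
```

For example, 0xFFFFFFC000080000, which lies in the linear mapping starting at 0xFFFFFFC000000000, decomposes into PGD index 256, PMD index 0 and PTE index 128. Each PGD entry therefore covers 1 GB and each PMD entry 2 MB.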

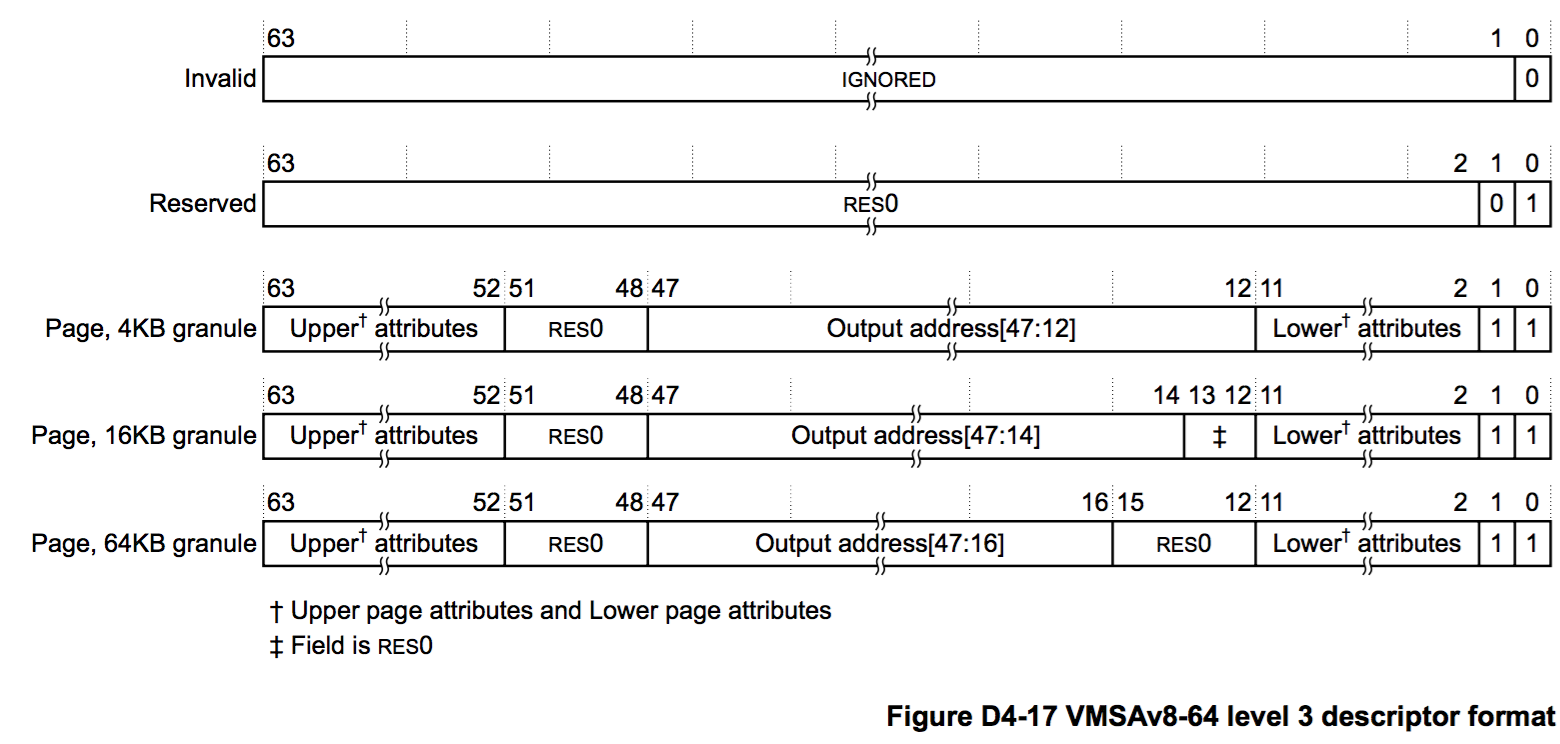

Here are the level 0, level 1 and level 2 descriptor formats:

And here is the level 3 descriptor format:
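The processing functions only look at a few descriptor fields. The sketch below decodes them following the ARMv8-A stage 1 format for a 4KB granule: bits [1:0] distinguish tables from blocks, bits [47:12] hold the next-level table address (the desc & 0xFFFFFFFFF000 mask used by RKP), PXN is bit 53 of block descriptors and PXNTable is bit 59 of table descriptors. The decompiled code does not show which bit set_pxn_bit_of_desc actually sets, so with_pxn is only an assumption about its behavior; all helper names are hypothetical.

```c
#include <stdint.h>

/* ARMv8-A stage 1 descriptor fields, 4 KB granule, levels 1 and 2. */
#define DESC_OA_MASK   0x0000FFFFFFFFF000ULL /* bits [47:12]: next table or output address */
#define DESC_PXN       (1ULL << 53)          /* block/page descriptors: PXN */
#define DESC_PXN_TABLE (1ULL << 59)          /* table descriptors: PXNTable */

/* Bits [1:0]: 0b11 = table, 0b01 = block, 0bx0 = invalid (at level 3, 0b11 is a page). */
int is_table_desc(uint64_t desc) { return (desc & 3) == 3; }
int is_block_desc(uint64_t desc) { return (desc & 3) == 1; }

/* Physical address of the next-level table (same mask as desc & 0xFFFFFFFFF000). */
uint64_t next_table_pa(uint64_t desc) { return desc & DESC_OA_MASK; }

/* Assumed behavior of set_pxn_bit_of_desc: PXNTable for tables, PXN otherwise. */
uint64_t with_pxn(uint64_t desc)
{
    return desc | (is_table_desc(desc) ? DESC_PXN_TABLE : DESC_PXN);
}
```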

First Level

Processing of the first level tables is done by the rkp_l1pgt_process_table function.

int64_t rkp_l1pgt_process_table(int64_t pgd, uint32_t high_bits, uint32_t is_alloc) {
    // ...
    if (high_bits == 0x1FFFFFF) {
        if (pgd != INIT_MM_PGD && (!TRAMP_PGD || pgd != TRAMP_PGD) || rkp_deferred_inited) {
            rkp_policy_violation("only allowed on kerenl PGD or tramp PDG! l1t : %lx", pgd);
            return -1;
        }
    } else {
        if (ID_MAP_PGD == pgd)
            return 0;
    }
    rkp_phys_map_lock(pgd);
    if (is_alloc) {
        if (is_phys_map_l1(pgd)) {
            rkp_phys_map_unlock(pgd);
            return 0;
        }
        if (high_bits)
            type = KERNEL | L1;
        else
            type = L1;
        res = rkp_phys_map_set(pgd, type);
        if (res < 0) {
            rkp_phys_map_unlock(pgd);
            return res;
        }
        res = rkp_s2_page_change_permission(pgd, 0x80, 0, 0);
        if (res < 0) {
            uh_log('L', "rkp_l1pgt.c", 63, "Process l1t failed, l1t addr : %lx, op : %d", pgd, 1);
            rkp_phys_map_unlock(pgd);
            return res;
        }
    } else {
        if (!is_phys_map_l1(pgd)) {
            rkp_phys_map_unlock(pgd);
            return 0;
        }
        res = rkp_phys_map_set(pgd, FREE);
        if (res < 0) {
            rkp_phys_map_unlock(pgd);
            return res;
        }
        res = rkp_s2_page_change_permission(pgd, 0, 1, 0);
        if (res < 0) {
            uh_log('L', "rkp_l1pgt.c", 80, "Process l1t failed, l1t addr : %lx, op : %d", pgd, 0);
            rkp_phys_map_unlock(pgd);
            return res;
        }
    }
    offset = 0;
    entry = 0;
    start_addr = high_bits << 39;
    do {
        desc_p = pgd + entry;
        desc = *desc_p;
        if ((desc & 3) != 3) {
            if (desc)
                set_pxn_bit_of_desc(desc_p, 1);
        } else {
            addr = start_addr & 0xFFFFFF803FFFFFFF | offset;
            res += rkp_l2pgt_process_table(desc & 0xFFFFFFFFF000, addr, is_alloc);
            if (!(start_addr >> 39))
                set_pxn_bit_of_desc(desc_p, 1);
        }
        entry += 8;
        offset += 0x40000000;
        start_addr = addr;
    } while (entry != 0x1000);
    rkp_phys_map_unlock(pgd);
    return res;
}

rkp_l1pgt_process_table does the following:

- if it is given a PGD for kernel space (TTBR1_EL1);
  - it must be tramp_pg_dir or swapper_pg_dir;
  - RKP must not have completed its deferred initialization yet.
- if it is given a PGD for user space (TTBR0_EL1);
  - if it is idmap_pg_dir, it returns early.
- then, for both kernel and user space;
  - if the PGD is being retired;
    - it checks in the physmap that it is indeed a PGD;
    - it marks it as free in the physmap;
    - it makes it RWX in stage 2 (not allowed if initialized).
  - if the PGD is being introduced;
    - it checks that it is not already marked as a PGD in the physmap;
    - it marks it as such in the physmap;
    - it makes it RO in stage 2 (not allowed if initialized).
  - then, in both cases, for each entry of the PGD;
    - for blocks, it sets their PXN bit;
    - for tables, it calls rkp_l2pgt_process_table on them;
    - it also sets the PXN bit of these tables if VA < 0x8000000000.
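The per-entry loop can be modelled in user space as follows. This is a simplified sketch, not RKP's code: walk_l1_table is a hypothetical name, the level-2 processing is replaced by an optional callback, the physmap and stage 2 bookkeeping are omitted, and the PXN bits used (53 for blocks, 59 for tables) are assumed from the ARMv8-A descriptor format. It computes each entry's VA directly as VA[63:39] = high_bits and VA[38:30] = entry index, which is what the start_addr/offset arithmetic in the decompiled loop is meant to produce.

```c
#include <stddef.h>
#include <stdint.h>

#define L1_ENTRIES      512
#define DESC_TYPE_MASK  0x3ULL
#define DESC_TYPE_TABLE 0x3ULL
#define DESC_OA_MASK    0x0000FFFFFFFFF000ULL
#define BLOCK_PXN       (1ULL << 53)  /* assumed: PXN of block descriptors */
#define TABLE_PXN       (1ULL << 59)  /* assumed: PXNTable of table descriptors */

/* Hypothetical stand-in for rkp_l2pgt_process_table. */
typedef int (*l2_handler_t)(uint64_t pmd_pa, uint64_t va);

/* Walks the 512 entries of a first-level table, as the decompiled loop does. */
int walk_l1_table(uint64_t *pgd, uint32_t high_bits, l2_handler_t handle_l2)
{
    int res = 0;

    for (size_t i = 0; i < L1_ENTRIES; i++) {
        uint64_t desc = pgd[i];
        /* VA[63:39] = high_bits, VA[38:30] = entry index */
        uint64_t va = ((uint64_t)high_bits << 39) | ((uint64_t)i << 30);

        if ((desc & DESC_TYPE_MASK) != DESC_TYPE_TABLE) {
            /* Non-empty, non-table entries (blocks) are made PXN. */
            if (desc)
                pgd[i] |= BLOCK_PXN;
        } else {
            if (handle_l2)
                res += handle_l2(desc & DESC_OA_MASK, va);
            /* Tables mapping user space (VA < 0x8000000000) are made PXN. */
            if (!(va >> 39))
                pgd[i] |= TABLE_PXN;
        }
    }
    return res;
}
```

With a user PGD (high_bits = 0), both blocks and tables end up PXN, so the kernel can never execute user memory; with the kernel PGD (high_bits = 0x1FFFFFF), only blocks are made PXN and tables are left to the next level.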

Second Level

Processing of the second level tables is done by the rkp_l2pgt_process_table function.

int64_t rkp_l2pgt_process_table(int64_t pmd, uint64_t start_addr, uint32_t is_alloc) {
    // ...
    if (!(start_addr >> 39)) {
        if (!pmd_allocated_by_rkp) {
            if (page_allocator_is_allocated(pmd) == 1)
                pmd_allocated_by_rkp = 1;
            else
                pmd_allocated_by_rkp = -1;
        }
        if (pmd_allocated_by_rkp == -1)
            return 0;
    }
    rkp_phys_map_lock(pmd);
    if (is_alloc) {
        if (is_phys_map_l2(pmd)) {
            rkp_phys_map_unlock(pmd);
            return 0;
        }
        if (start_addr >> 39)
            type = KERNEL | L2;
        else
            type = L2;
        res = rkp_phys_map_set(pmd, (start_addr >> 23) & 0xFF80 | type);
        if (res < 0) {
            rkp_phys_map_unlock(pmd);
            return res;
        }
        res = rkp_s2_page_change_permission(pmd, 0x80, 0, 0);
        if (res < 0) {
            uh_log('L', "rkp_l2pgt.c", 98, "Process l2t failed, %lx, %d", pmd, 1);
            rkp_phys_map_unlock(pmd);
            return res;
        }
    } else {
        if (!is_phys_map_l2(pmd)) {
            rkp_phys_map_unlock(pmd);
            return 0;
        }
        if (start_addr >= 0xFFFFFF8000000000)
            rkp_policy_violation("Never allow free kernel page table %lx", pmd);
        if (is_phys_map_kernel(pmd))
            rkp_policy_violation("Entry must not point to kernel page table %lx", pmd);
        res = rkp_phys_map_set(pmd, FREE);
        if (res < 0) {
            rkp_phys_map_unlock(pmd);
            return 0;
        }
        res = rkp_s2_page_change_permission(pmd, 0, 1, 0);
        if (res < 0) {
            uh_log('L', "rkp_l2pgt.c", 123, "Process l2t failed, %lx, %d", pmd, 0);
            rkp_phys_map_unlock(pmd);
            return 0;
        }
    }
    offset = 0;
    for (i = 0; i != 0x1000; i += 8) {
        addr = offset | start_addr & 0xFFFFFFFFC01FFFFF;
        res += check_single_l2e(pmd + i, addr, is_alloc);
        offset += 0x200000;
    }
    rkp_phys_map_unlock(pmd);
    return res;
}
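Two pieces of arithmetic in rkp_l2pgt_process_table are worth spelling out. The physmap value (start_addr >> 23) & 0xFF80 moves VA[38:30], the index of the PGD entry pointing to this PMD, into bits [15:7], so the physmap appears to record which PGD slot a PMD belongs to alongside its type. And offset | start_addr & 0xFFFFFFFFC01FFFFF clears VA[29:21] before inserting each entry's index, giving the VA covered by that entry. The helper names in this sketch are hypothetical.

```c
#include <stdint.h>

/* VA covered by entry `index` of a PMD whose first entry maps start_va:
 * VA[29:21] is cleared, then replaced by the entry index. */
uint64_t l2_entry_va(uint64_t start_va, unsigned index)
{
    return ((uint64_t)index << 21) | (start_va & 0xFFFFFFFFC01FFFFFULL);
}

/* Value stored alongside the type in the physmap: VA[38:30] moved to bits [15:7]. */
uint64_t l2_physmap_tag(uint64_t start_va)
{
    return (start_va >> 23) & 0xFF80;
}

/* Recovers the PGD index from such a value. */
unsigned pgd_index_from_tag(uint64_t tag)
{
    return (unsigned)((tag >> 7) & 0x1FF);
}
```

For the PMD mapping the start of the linear map (VA 0xFFFFFFC000000000, PGD index 256), the stored value is 0x8000, and its entries cover 0xFFFFFFC000000000, 0xFFFFFFC000200000, and so on in 2 MB steps.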

rkp_l2pgt_process_table does the following:

- if the VA < 0x8000000000 (user space);
  - if pmd_allocated_by_rkp is 0 (default value), it sets pmd_allocated_by_rkp to 1 if the PMD was allocated by the RKP page allocator, and to -1 otherwise;
  - if pmd_allocated_by_rkp is -1, it returns early.
- if the PMD is being retired;
  - it checks in the physmap that it is indeed a PMD;
  - it double-checks that it is not a kernel PMD (a kernel VA or a physmap entry marked as kernel triggers a policy violation);
  - it marks it as free in the physmap;
  - it makes it RWX in stage 2 (not allowed if initialized).
- if the PMD is being introduced;
  - it checks that it is not already marked as a PMD in the physmap;